GPT-5's debut is slop; Will AI cause the next depression? Harvard prof warns of alien invasion; Alpha School & homeschool heroes

Desiderata #37

The Desiderata series is a regular roundup of links and thoughts for paid subscribers, and an open thread for the community.

Contents:

1. GPT-5’s debut is slop.
2. 10% of all human experience took place since the year 2000.
3. Education is a mirror. What’s Alpha School’s reflection?
4. The rise of the secular homeschool superheroes.
5. “The Cheese that Gives you Nightmares.”
6. Avi Loeb at Harvard warns of alien invasion.
7. Moths as celestial navigators.
8. Will AI cause the next depression?
9. From the archives.
10. Comment, share anything, ask anything.

1. GPT-5’s debut is slop.

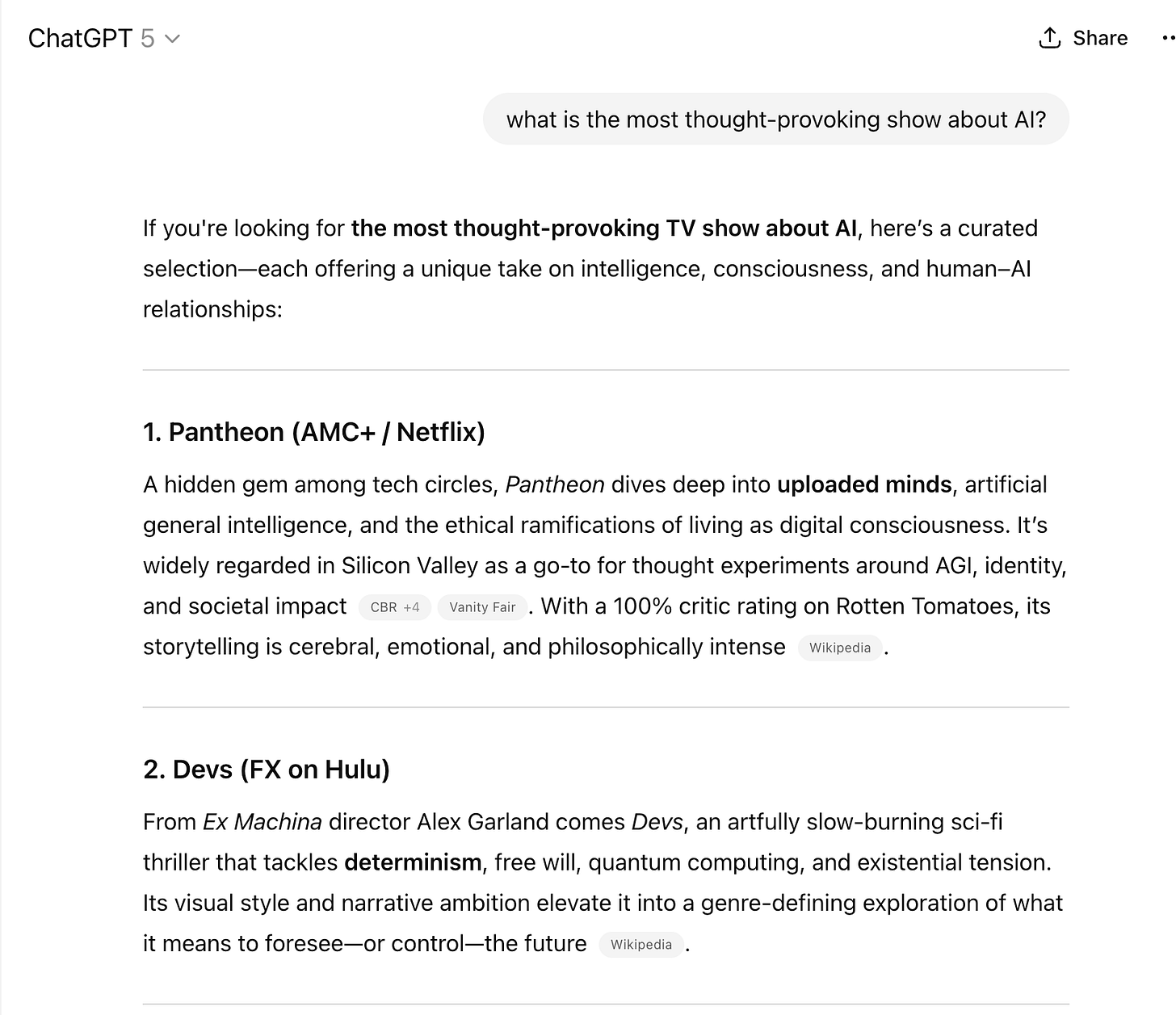

GPT-5’s launch is imminent. Likely tomorrow. We also have the first confirmed example of an output known for sure to be from GPT-5, which was shared by Sam Altman himself as a screenshot on social media. He asked GPT-5 “what is the most thought-provoking show about AI?”

Hmmm.

Hmmmmmmmmm.

Yeah, so #2 is a slop answer, no?

Maybe even arguably a hallucination. Certainly, that #2 recommendation, the TV show Devs, does initially seem like a good answer to Altman’s question, in that it is “prestige sci-fi” and an overall high-quality show. But I’ve seen Devs. I’d recommend it myself, in fact (streaming on Hulu). Here’s the thing: Devs is not a sci-fi show about AI! In no way, shape, or form is it a show about AI. In fact, it’s refreshing how not about AI it is. Instead, it’s a show about quantum physics, free will, and determinism. The main techno-MacGuffin of Devs is a big honking quantum computer.

As far as I can remember, the only brief mention of AI is how, in the first episode, the main protagonist of that episode is recruited away from an internal AI division of the company to go work on this new quantum computing project. Now, what’s interesting is that GPT-5 does summarize the show appropriately as being about determinism and free will and existential tension (and, by implication, not about AI). But its correct summary makes its error of including Devs on the list almost worse, because it shows off the same inability to self-correct that LLMs have struggled with for years now. GPT-5 doesn’t catch the logical inconsistency of giving a not-AI-based description of a TV show, despite being specifically asked for AI-based TV shows (there’s not even a “This isn’t about AI, but it’s a high-quality show about related subjects like…”). Meaning that this output, the very first I’ve seen from GPT-5, feels extremely LLM-ish, falling into all the old traps. Its fundamental nature has not changed.

This is why people still call it a “stochastic parrot” or “autocomplete,” and it’s also why such criticisms, even if weaker than they once were, can’t be entirely dismissed. Even at GPT-5’s incredible level of ability, its fundamental nature is still that of autocompleting conversations. In turn, autocompleting conversations leads to slop, exactly like giving Devs as a recommendation here. GPT-5 is secretly answering not Altman’s question, but a different question entirely: when autocompleting a conversation about sci-fi shows and recommendations, what common answers crop up? Well, Devs often crops up, so let’s list Devs here.

Judge GPT-5’s output by honest standards. If a human said to me “There’s this great sci-fi show about AI, you should check it out, it’s called Devs,” and then I went and watched Devs, I would spend the entire time waiting for the AI plot twist to make an appearance. At the series’ end, when the credits rolled, I would be 100% certain that person was an idiot.

2. 10% of all summed human experience took place since the year 2000.

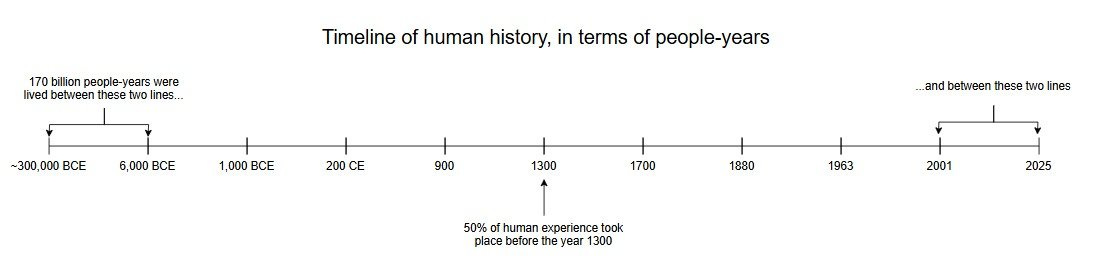

According to a calculation by blogger Luke Eure, 50% of human experience (total experience hours by “modern humans”) has taken place after 1300 AD.

Which would mean that 10% of collective human experience has occurred since the year 2000! It also means that most of us now alive will live, or have lived, through a surprisingly large chunk of everything humans have ever experienced (at least, from the intrinsic perspective).

3. Education is a mirror. What’s Alpha School’s reflection?

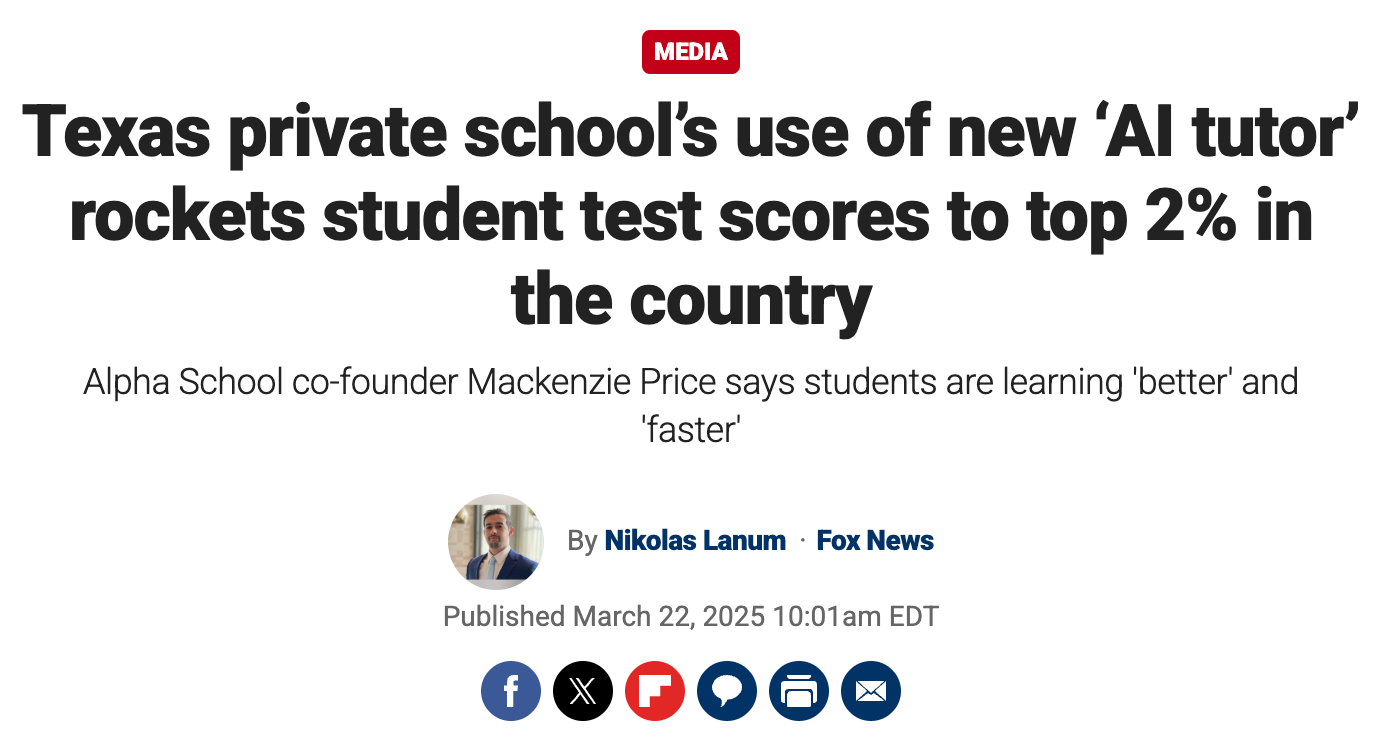

In the education space, the buzz right now is around Alpha School. Their pitch (covered widely in the media) is that they do 2 hours of learning a day with an “AI tutor.”

More recently, The New York Times profiled them:

At Alpha’s flagship, students spend a total of just two hours a day on subjects like reading and math, using A.I.-driven software. The remaining hours rely on A.I. and an adult “guide,” not a teacher, to help students develop practical skills in areas such as entrepreneurship, public speaking and financial literacy.

I’ll say upfront: I do believe that 2 hours of learning a day, if done well, could be enough for an education. I too think kids should have way more free time than they do. So there is something to the model of “2 hours and done” that I think is attractive.

But I have some questions, as I was one of the few actual attendees of the first “Alpha Anywhere” live info session, which revealed details of how their new program for homeschoolers works. Having seen more of it, Alpha School appears to be based on progressing through pre-set educational apps, and doesn’t often involve AI-as-tutor-qua-tutor (i.e., interacting primarily with an AI like ChatGPT). While the Times says that

But Alpha isn’t using A.I. as a tutor or a supplement. It is the school’s primary educational driver to move students through academic content.

all I saw was one use case, which was AI basically making adaptive reading comprehension tests on the fly (I think that specifically is actually a bad idea, and it looked like reading boring LLM slop to me).

For this reason, the more realistic story behind Alpha School is not “Wow, this school is using AI to get such great results!” but rather that Alpha School is “education app stacking” and there are finally good enough, and in-depth enough, educational apps to cover most of the high school curriculum in a high-quality and interactive way. That’s a big and important change! E.g., consider this homeschooling mom, who points out that she was basically replicating what Alpha School is doing by using a similar set of education apps.

Most importantly, and likely controversially, Alpha School pays the students to progress through the apps via an internal currency that can be redeemed for goodies (oddly, this detail is left out of the coverage by outlets like the Times, but hey, it’s “the paper of record,” right?).

My thoughts are two-fold. First, I do think it’s true that ed-apps have gotten good enough to replace a lot of the core curriculum and allow for remarkable acceleration. Second, I think it’s a mistake to separate the guides from the learning itself. That is, it appears the actual academics at Alpha School are self-contained, as if in a box; there’s a firewall between the intellectual environment of the school and what’s actually being learned during those 2 hours on the apps. Not to say that’s bad for all kids! Plenty of kids ultimately are interested in things beyond academics, and sequestering the academics “in a box” isn’t necessarily bad for them.

However, it’s inevitable that this disconnect makes the academics fundamentally perfunctory (to be fair, this is true for a lot of traditional schools as well). As I once wrote about the importance of human tutors:

Serious learning is socio-intellectual. Even if the intellectual part were to ever get fully covered by AI one day, the “socio” part cannot… just like how great companies often have an irreducibly great culture, so does intellectual progress, education, and advancement have an irreducible social component.

Now, I’m sure that Alpha School has a socio-intellectual culture! It’s just that the culture doesn’t appear to be about the actual academics learned during those 2 hours. And that matters for what the kids work on and find interesting themselves. E.g., in the Times we get an example of student projects like “a chatbot that offers dating advice,” and in Fox News another example was an “AI dating coach for teenagers,” and one of the cited recent accolades of Alpha School students is placing 2nd in some new high school competition, the Global AI Debates.

At least in terms of the public examples, a lot of the most impressive academic/intellectual successes of the kids at Alpha School appear to involve AI. Why? Because the people running Alpha School are most interested in AI!

And now apply that to everything: that’s true for math, and literature, and science, and philosophy. So then you can see the problem: the disconnect between the role models and the academics. If the Alpha School guides and staff don’t really care about math—if it’s just a hurdle to be overcome, just another hoop to jump through—why should the kids?

Want to know why education is hard? Harder than almost anything in the world? It’s not that education doesn’t work. Rather, the problem is that it works too well.

Education is a mirror.