I said no to $20,000 because writers must take a moral stand on AI

And yet so many others said yes

With the news breaking that around 10% of Substacks contain some AI-generated content (and probably plenty of bigger outlets do as well; this problem isn’t specific to Substack), I’d like to tell a tale.

It began earlier this year, when someone I respect and like reached out to me. Their offer? Do a book club version of a Master Class (get interviewed, write some commentary) about any book I want. The payment—notably high since it required only a few filming sessions and a little writing—was a hefty $20,000.

Truth be told, I don’t yet make quite enough money here on Substack to cover my family’s budget on its own. I make tantalizingly close to enough, enough to be the main support for my family, and I cobble together the rest through other means, like the occasional book deal or speaking fees, and also from being a surprisingly savvy (or perhaps just lucky) investor. Thanksgiving is coming up, so I’ll note that I’m lucky in general to make as much as I do from writing and thinking. We all choose what to maximize in our lives and careers, and I made a conscious choice to have quiet, flexible time for reading and research and writing and being a parent, rather than trying to make a lot of money, which is why I moved out to Cape Cod. Kurt Vonnegut also moved out to Cape Cod when he started writing full-time (he wrote Slaughterhouse-Five just a town over from me), but he had to moonlight at a Saab dealership. I don’t have to do that, so I’m lucky.

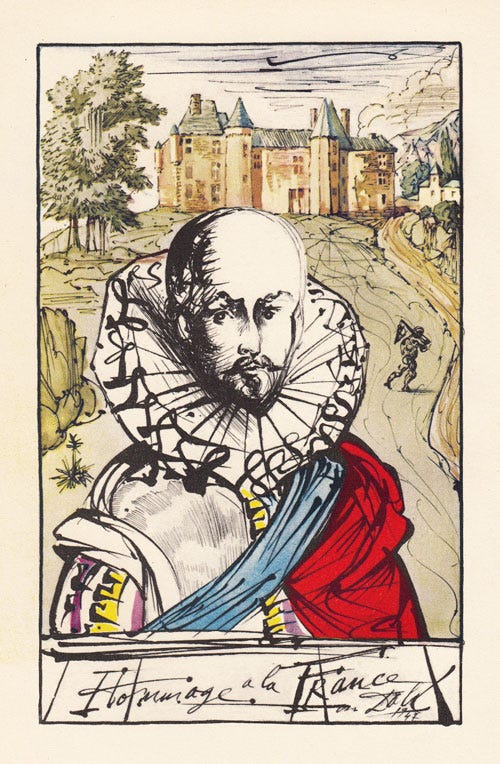

All to say, an easy $20,000 is not nothing for me. Additionally, I was excited by the idea of a book club, for it immediately popped into my head to do it on Michel de Montaigne.

In the late 1500s, Michel de Montaigne became the delight of the Parisian aristocracy with his Essais; he was the first to truly popularize the essay as a literary form, and, through his musings on daily life, became one of the great practical philosophers of the Renaissance. One might go so far as to say Montaigne invented the modern narrative consciousness you recognize in contemporary writing: that searching, doubtful, analytic voice. My voice, like that of so many other writers, is some linguistic evolutionary descendant of his.

But while I was initially excited, there was another aspect of the project: I learned that this book club would be not with me, but rather with an AI version of me, one based on a bit of original commentary I created about the book (not a very long précis, in my understanding), then repackaged through a chatbot.

Furthermore, the app possessed something else I couldn’t get on board with: the option to use AI to translate the text into “contemporary versions” that are supposedly more easily digestible. In other words, to rewrite the classics to be more modern, which I guess means simpler. Shorter. Keep in mind: Shakespeare is an option. What, precisely, is an “easier to digest” version of Shakespeare? I have little confidence that an AI-simplified version of a classic preserves the same nuances, the same emphasis of style. That doesn’t seem in line with the original author’s intent, and I am an author, and I feel I must respect the dead.

To the company’s credit, they are upfront about the AI usage. I’m not here to besmirch their intentions. But it struck me as antithetical to the point of a book club: both the contemporary translation aspect and the AI-likeness.

Now, I’m no great Montaigne scholar. I’ve read a biography, and I occasionally return to him just to drink deep from that original well. In a real book club, I would talk about Montaigne and newsletters, and what the essay meant then and what it means now, and why I think of Montaigne as one of the great first champions of agnosticism, and how this agnostic uncertainty was at the heart of the rise of humanism and even democracy, and how his brand of agnosticism is ultimately what essays should aspire to: a consideration of a thing, a turning over of an idea the way a baby physically handles an object for the first time. I would say all that, and maybe I’d be correct, or maybe not, but I’d be me. There’d be a social connection too: hearing the experience of what Montaigne triggered in others, what they themselves thought or brought to the table, or how they read him differently.

But with this proposal, I couldn’t get over the thought that I would not only be expanding the amount of AI-generated slop in our culture, but essentially selling the AI-girlfriend experience of a book club. You wouldn’t be reading Montaigne with me. You’d be reading Montaigne with some ersatz knockoff. Already porn stars are doing this: prompting an AI on their sexts and images and selling them; in fact, companies now apparently reach out to non-porn-associated people (like those with large Twitter followings) asking if they can make an NSFW version of them in return for payment. Of course, the AI girlfriend you’d be buying in this particular case would be based on a balding middle-aged man who lives on Cape Cod. But hey, at least the conversation is good, right? And yes, obviously there’s a higher-minded purpose for an AI-based book club, compared to the smutty nothings of an AI girlfriend. But is the content the only thing that matters? It would still be an illusion of intellectual connection. You wouldn’t really be reading the book with me as a “companion,” nor having a “conversation,” nor having a “discussion,” which is how it was described. No, the personality of any AI clone is very much still that same bland butler, that same Reddit-commenter voice, given a thin veneer of outside personality and someone else’s portrait in the chat window. It’s just putting lipstick on ChatGPT. The real thing shines through. In other words, all other considerations aside, at this stage of the tech the illusion of connection, even when the consumer knows explicitly that it is an illusion, is necessarily the product. And I’m not comfortable with that.

So I said no to the $20,000. Meanwhile, a host of famous and successful authors, names you assuredly know, all said yes. The only two people who said no: me and Andre Dubus III.

(For those who don’t know, Andre Dubus III is the author of House of Sand and Fog, among many other wonderful books like his memoir Townie. He’s a great writing teacher, an entrancing public speaker, and happens to be one of the most stand-up ethical people I know. Andre hosted the book launch party for my first novel, The Revelations, and was my first—and last—writing instructor, back when I was a young boy. Our refusals were not coordinated, merely a coincidence stemming from a shared Yankee stubbornness.)

I know many people will say they don’t care if I sell an AI-likeness or support AI rewrites of classics; they may even think me prudish for refusing. That’s fine. I had to make a choice I could stand behind, and one way to reason about ethics is to imagine the kind of world you’d like to exist. The world I want to exist has more grounded notions of authenticity and more careful use of such a powerful technology. Even if something is a moral gray zone now, what is the directionality of the choice? Here, it points toward something like Taylor Swift selling an AI-likeness for $9.99 a month to teenage girls. No thanks; our culture is already solipsistic enough. And I’ll point out something else. Even if I’m wrong, and selling your AI-likeness is fine and dandy, and AI-rewritten classics are just par for the course in the future, I’m still shocked that 95% of the writers asked said yes. For I don’t think this is a 95%-clear issue, even if I’m personally wrong about it. Many of the yeses style themselves not only as writers, but as important voices on morality and ethics, including on AI’s threat to human art! Yet none of that showed through.

The incentives here, near the peak of AI hype, are going to be the same as they were for NFTs. Remember when celebrities regularly shilled low-market-cap cryptos to the public? Why? Because they simply couldn’t say no to the money.

Please note: I don’t think all use of AI is morally dubious! That’s not what I’m saying and not what I believe. There are myriad things I’m excited by, like how AI can help us understand whale speech or how proteins fold or better target transcranial ultrasound. In terms of LLMs and chatbots, I think they can be used in education with extreme effectiveness. They’re great for voice dictation, and far better than Google as a first stage for research and search (I use them for that myself!). I don’t begrudge anyone who uses AI as a sounding board for their ideas, or to get feedback on their writing, or to query whatever springs into their heads, nor those who use it to overcome the professional disadvantage of English as a second language. But the second you click “generate draft” and one appears like magic, no matter how much prior fine-tuning or prompting you’ve done, that’s not your writing, nor your speech, nor your commentary, and certainly not your side of a discussion. And I don’t want our civilization’s culture to be produced by ChatGPT with lipstick on. I think it’s sad and depressing and sort of the end of the story of human creativity. Even if the products at first seem of equal ability, I think there are very negative long-term effects downstream.

As with any new technology, we all have to take a stand, and mine is that I will never feed you AI content slop under my name, be it via a chatbot wrapper with my photo on it on some other platform, nor here on Substack, nor in my books. My writing will always be the authentic me, the one that is sometimes wrong, the one that occasionally fucks up links, the one who makes mistakes, the one who once wrote “yolk” when he meant “yoke” and everyone got in a pedantic huff, the one that never manages to write a post under 1,500 words; but the one that is trying, really trying, to produce stuff that’s authentic and artistically and intellectually worth your time. I believe the broadcast-based connection between the consciousness of the writer and that of the reader is a sacred trust. I’m honored it exists between me and so many people, and I don’t plan on swapping it out for an artificial replacement.

So with that tale told, consider this my annual plea to become a paying subscriber to The Intrinsic Perspective to support this choice, and if not to me, then to your other favorite writers/artists/thinkers/creators. Because there are going to be wild, absolutely wild, financial incentives to use AI to scale content creation in ways that slip easily, thoughtlessly, into the harmful and illusory. Most of the big outlets will do it, if they haven’t started already. Subscriptions and connections to real humans you trust are the only way to combat it. We will be what remains of the internet when this is all over.
