The executive order Trump should sign: AI watermarks

Regulation that's politically neutral and technologically feasible

Since taking office, President Trump has already signed over 200 executive orders, almost as many as in an average presidential term. Critically, these included one repealing Joe Biden's executive order regulating the AI industry.

That original executive order was signed by Biden in 2023, mostly to ensure AI outputs did not violate civil rights or privacy. Republicans argued it forced companies to make AI's political compass more left-wing, pointing to analysis showing most AIs aren’t politically neutral, but rather lean left in the valence of their responses and what topics they consider verboten.

Its repeal means there is essentially no significant nationwide regulation of AI in America as of now.

In a further boon for the industry, Sam Altman visited the White House to announce, alongside President Trump, the construction of a 500-billion-dollar compute center for OpenAI, dubbed “Stargate.” Far-away and speculative rewards, like “AI will make a vaccine for cancer,” were floated.

Yet there are many reasonable, politically neutral voices, including Nobel laureate Geoffrey Hinton (the “Godfather of AI”), who worry that the technology, if left completely unchecked, might erode how culture gets created, damage public trust, be used maliciously, and possibly even pose significant global safety risks one day.

So what should replace Biden’s expunged order? How should AI be regulated?

I think there is a way, one implementable immediately, with high upside and zero downside: ordering that AI outputs be robustly “watermarked” so that they’re always detectable. This is an especially sensible target for a government mandate because, at a technical level, such watermarking only works if the companies cooperate.

Specifically, what should be mandated is baking subtle statistical patterns into the next-word choices an AI makes. This can be done in ways, freely available to open-source developers, that aren't noticeable to users and don’t affect capabilities, yet still create a hidden signal that can be checked by a provided detector with inside knowledge of how the word choices are being subtly warped (this works for code and images too).
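For readers curious about the mechanics, here is a toy Python sketch of one well-known scheme of this kind from the research literature, the “green list” watermark (Kirchenbauer et al., 2023). It is an illustration under stated assumptions, not any company’s deployed method: the vocabulary, the fake model `toy_scores`, and the parameters `GAMMA` and `DELTA` are all made up. A secret key plus the previous word picks a random “green” quarter of the vocabulary; generation nudges green words upward; the detector, which needs only the key and not the model, counts how many words landed on green and converts that count into a z-score.

```python
import hashlib
import math
import random
from typing import List, Optional, Set

# Toy assumptions: a 1,000-word vocabulary and a fake "model" whose
# next-word scores depend only on the previous word.
VOCAB = [f"w{i}" for i in range(1000)]
GAMMA = 0.25  # fraction of the vocabulary marked "green" at each step
DELTA = 4.0   # score bonus for green words (exaggerated so short texts show it)


def green_list(key: str, prev_word: str) -> Set[str]:
    """Secret, key-dependent subset of the vocabulary, re-drawn after each word."""
    digest = hashlib.sha256(f"{key}|{prev_word}".encode()).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    return set(rng.sample(VOCAB, int(GAMMA * len(VOCAB))))


def toy_scores(prev_word: str) -> List[float]:
    """Hypothetical stand-in for a real model's next-word scores (logits)."""
    rng = random.Random(prev_word)
    return [rng.gauss(0.0, 1.0) for _ in VOCAB]


def generate(n_words: int, key: Optional[str] = None, seed: int = 0) -> List[str]:
    """Sample words; if a key is given, boost every green word by DELTA first."""
    rng = random.Random(seed)
    words = ["<s>"]
    for _ in range(n_words):
        prev = words[-1]
        scores = toy_scores(prev)
        if key is not None:
            greens = green_list(key, prev)
            scores = [s + DELTA if w in greens else s for w, s in zip(VOCAB, scores)]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # softmax (unnormalized)
        words.append(rng.choices(VOCAB, weights=weights, k=1)[0])
    return words[1:]


def detect_z(words: List[str], key: str) -> float:
    """How far the green-word count sits above the GAMMA rate expected by chance."""
    prevs = ["<s>"] + words[:-1]
    hits = sum(w in green_list(key, p) for p, w in zip(prevs, words))
    n = len(words)
    return (hits - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


marked = generate(200, key="secret-key", seed=1)
plain = generate(200, seed=1)
print(f"watermarked:   z = {detect_z(marked, 'secret-key'):.1f}")
print(f"unwatermarked: z = {detect_z(plain, 'secret-key'):.1f}")
```

On a 200-word sample, the watermarked text should score far above a threshold such as z = 4, while the unwatermarked text (and the watermarked text checked with the wrong key) should hover near 0. Real schemes use a much smaller bonus than this toy’s exaggerated `DELTA` to keep the effect on output quality small.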

Mandating watermarking does not tell companies how to build AI, nor does it impose a burden heavy enough to entrench incumbents as a monopoly, since the techniques for watermarking are known, low-cost, and even open-sourced. It is pro-freedom regulation in that it neither imposes guardrails nor pushes any political views. But it is pro-humanity in that it ensures that if AI is used, it is detectable.

Here are four reasons why it would benefit the nation to watermark AI outputs, and how robust watermarking methods could be implemented basically tomorrow.

Watermarking keeps America a meritocracy

January is ending. Students are going back to school, and so, once again, ChatGPT has regained a big chunk of its user base.

With 89% of students using ChatGPT in some capacity (the other 11% use Claude, presumably), AI has caused a crisis within academia, since at this point everything except closed-laptop tests can be generated at the click of a button. Sure, AI can be an effective tutor, which is great, and we shouldn’t deny students that. But the line between positive use and academic cheating (like having it do the homework) is incredibly slippery.

People talk a lot about “high-trust” societies, often in the context of asking what kills high-trust societies. They debate causes from wealth inequality to immigration. But AI is sneakily also a killer of cultural trust. Academic degrees already hold less weight than they used to, but nowadays, if you didn't graduate from at least a mid-tier college, the question is forevermore: Why didn't you just let AI do the work? And if calculus homework doesn’t seem important for a high-trust society, I’ll point out that AI can pass most medical tests too. Want a crisis of competence in a decade? Let AI be an untraceable cheat code for the American meritocracy, which still mostly flows through academia.

Watermarking saves the open internet

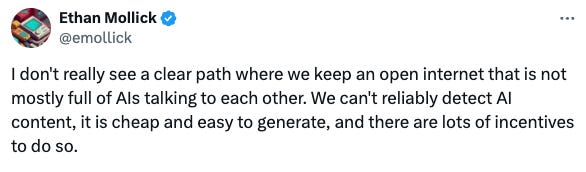

The internet is filling up with AI slop. And while the internet was never a high-trust place, at least you could trust that you were hearing from real people. Now it could all just be bots, and you wouldn’t know.

Reminder for the new Republican government: until basically yesterday, the open internet was the only space with unrestricted freedom of speech. You won’t always hold the reins of power. That space might be important, even critical, in the future, and allowing AI to pollute it into an unusable wasteland where every independent forum is swamped by undetectable bots is a dubious bet that future centralized platforms (soon necessarily cloistered) will forever uphold the values you’d like. The open internet is a reservoir against totalitarianism from any source, and should be treated as a protected resource; watermarking ensures AI pollution is at least trackable, so decentralized forums for real, anonymous humans can still exist.

Watermarking helps stop election rigging

All future election cycles will take place in a world where AIs can generate comments that look and sound exactly like real people. It’s obviously a problem that’s only going to get worse. Political parties, PACs and Super PACs, and especially foreign actors will attempt to sway elections with bots, with far greater success than before. In a world where bot usage can’t be detected, this means unchecked interference with the public opinion of Americans, in ways both subtle and impactful. Americans, not AI, should be the ones who drive the cultural conversation, and therefore the ones who decide what happens next in our democracy.

Watermarking makes malicious AIs trackable

It’s a simplification to say the Republican position is pro-unrestricted AI in all contexts. Elon Musk, now a close advisor to President Trump, has a long track record of worrying about the many downsides of AI, including existential risk. Even President Trump himself has called the technology's growing capabilities “alarming.”

AI safety may sound like a sci-fi concern, but we’re building a 500-billion-dollar compute center called “Stargate,” so it’s past time to stop dismissively hand-waving that malicious or rogue AI is purely sci-fi stuff. We live in a sci-fi world and have sci-fi concerns.

Many of us proponents of AI safety were shocked and disappointed when California’s non-political AI safety bill, SB 1047, was vetoed by Gavin Newsom for garbled reasons after making it close to the finish line. Nancy Pelosi, Meta, Google, and OpenAI all worked against it. Frankly, it failed because California politicians are too influenced by the lobbying of local AI companies.

Therefore, an executive order is especially appropriate because it combats entrenched special interests in one state regarding a matter that impacts all Americans. An order on watermarking would be a massive win for AI safety—without being about AI safety explicitly. For it would mean that wherever an AI goes, even if loosed into the online wild to act independently as an agent, it would leave a statistical trace in its wake. This works for both minor scamming bots (which will become ever more common) as well as more worrisome unknowns, like AI agents bent on their own ends.

Watermarking works, companies just refuse to do their part

It’s often wrongly said that watermarking AI outputs is impossible. What is true is that currently deployed detection methods produce so many false positives that they’re useless. But this is solely because AI companies aren't implementing the watermarking methods we know work.
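The false-positive problem is where a real watermark differs from after-the-fact AI detectors: with a keyed, green-list style watermark, the chance of wrongly flagging human text can be calculated rather than guessed. A minimal sketch, assuming the detector computes a z-score as in the green-list scheme and that human writing lands on green words like independent coin flips with probability `gamma` (an approximation); the function names are illustrative:

```python
import math


def false_positive_rate(z_threshold: float) -> float:
    """P(Z > z) for a standard normal: the chance that unwatermarked text
    scores above the threshold, assuming its green hits are independent
    coin flips with probability gamma."""
    return 0.5 * math.erfc(z_threshold / math.sqrt(2))


def words_needed(z_threshold: float, gamma: float, green_rate: float) -> int:
    """Shortest text whose *expected* z-score reaches the threshold, given the
    fraction of green words the watermark actually achieves (green_rate > gamma).
    Solves (green_rate - gamma) * sqrt(n) / sqrt(gamma * (1 - gamma)) >= z for n."""
    return math.ceil(z_threshold ** 2 * gamma * (1 - gamma) / (green_rate - gamma) ** 2)


print(false_positive_rate(4.0))       # about 3 in 100,000
print(words_needed(4.0, 0.25, 0.5))   # 48
```

Under these assumptions, a threshold of z = 4 wrongly flags roughly 3 in 100,000 human-written texts, and a watermark that makes half of all words green (with gamma = 0.25) needs only about 48 words, on average, to clear that bar. Raising the threshold trades a little more required text for even fewer false accusations.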

Companies don't want to implement actual watermarking because a huge amount of their traffic comes from things like academic cheating, bots, or spam. Instead, they try to pass off easily removable metadata as “watermarking.” For example, California is currently considering AB-3211, a bill supposedly about watermarking AI outputs, but which only requires that metadata be added (little extra data tags, which are often removed automatically on upload anyway). Companies like OpenAI support AB-3211 precisely because it’s utterly toothless.

One outlier company that has already moved to real, robust watermarking is Google, which (admirably, let’s give credit where it’s due) deployed such techniques for its Gemini models in October.

If one company does it, there’s not much effect. But if it were mandated for models above a certain size or capability, then detecting AI use would simply require going to a service that checks text against all the common models by calling each one’s signature detector.
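A checking service of this kind could be little more than a loop over provider-supplied detectors. The sketch below assumes, hypothetically, that each provider exposes a function returning a z-score for its own watermark; the `Detector` type, `check_text`, and the stub scores are illustrative, not any real API. One design point worth making explicit: testing a text against many models multiplies the chances of a false alarm, so the per-model threshold is raised with a Bonferroni correction.

```python
from dataclasses import dataclass
from statistics import NormalDist
from typing import Callable, Dict, List

# Hypothetical interface: each provider ships a detector that returns a
# z-score for its own watermark (higher means stronger evidence).
Detector = Callable[[str], float]


@dataclass
class Verdict:
    model: str
    z_score: float
    flagged: bool


def per_model_threshold(alpha: float, n_models: int) -> float:
    """Bonferroni correction: testing one text against n models, each at
    level alpha / n, keeps the chance of *any* false alarm at or below alpha."""
    return NormalDist().inv_cdf(1 - alpha / n_models)


def check_text(text: str, detectors: Dict[str, Detector],
               alpha: float = 1e-4) -> List[Verdict]:
    """Run every registered detector and flag models whose score clears the bar."""
    threshold = per_model_threshold(alpha, len(detectors))
    verdicts = []
    for model, detect in detectors.items():
        z = detect(text)
        verdicts.append(Verdict(model, z, z > threshold))
    return sorted(verdicts, key=lambda v: v.z_score, reverse=True)


# Stub detectors with fixed scores, purely for illustration.
stubs = {
    "model-a": lambda text: 0.7,
    "model-b": lambda text: 11.2,
    "model-c": lambda text: -0.3,
}
for verdict in check_text("some suspicious essay", stubs):
    print(verdict)
```

With three models and an overall false-alarm budget of 1 in 10,000, the per-model bar works out to roughly z = 4, so only `model-b` is flagged here.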

What about “paraphrasing attacks?”

A paraphrasing attack is when you take a watermarked output and run it through another AI, one that doesn't watermark its outputs, to paraphrase it (essentially, rewrite it to obscure the statistical watermark). Critics of watermarking usually point to paraphrasing attacks as a simple and unbeatable way to remove any possible watermark, since there are open-source models that can already paraphrase and can be run locally without detection.

Traditionally, this has been a killer criticism. Watermarking would still be a deterrent, of course, as paraphrasing is an extra hurdle and adds compute time for malicious actors. For example, when it comes to academic cheating, most college kids who use AI to write their essays are not going to run an open-source model on their own hardware. And if hacker groups are spamming social media with crypto bots, they’d have to spend twice the compute on every message, and so on.
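The deterrence argument, and the reason paraphrasing was traditionally such a strong criticism, can both be seen in a back-of-the-envelope model of a word-level green-list watermark. A minimal sketch, assuming the green list is seeded by the previous `context` words, that substitutions land independently at random, and that a word whose seed or identity was changed is green only by chance; `expected_z` and its parameters are illustrative names:

```python
import math


def expected_z(n_words: int, gamma: float, green_rate: float,
               replaced_frac: float, context: int = 1) -> float:
    """Expected detection z-score after a fraction of words is swapped at random.

    A word keeps its watermark signal only if neither it nor any of the
    `context` words seeding its green list was replaced; otherwise it is
    green merely by chance (probability gamma).
    """
    intact = (1 - replaced_frac) ** (context + 1)
    rate = intact * green_rate + (1 - intact) * gamma
    return (rate - gamma) * math.sqrt(n_words) / math.sqrt(gamma * (1 - gamma))


for frac in (0.0, 0.25, 0.5, 0.9):
    print(f"{frac:.0%} of words replaced: expected z = {expected_z(300, 0.25, 0.9, frac):.1f}")
```

Under these assumptions, a 300-word text with a 90% green rate (and gamma = 0.25) keeps an expected z of about 6.5 even with half its words swapped, comfortably above a threshold of 4, so light edits don't erase the signal. But a near-total rewrite (90% of words replaced) drives the expected z to about 0.3, which is why a full paraphrase by a second, unwatermarked model is the attack that matters.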

But importantly, there now exist watermarking methods that can thwart paraphrasing attacks. The research is clear on this. For example, in 2024 Baidu introduced a watermarking method that is based on semantics (rather than exact phrasing) and has since proved robust against paraphrasing attacks.

As the authors put it: “To enhance the robustness against paraphrase, we propose a semantics-based watermark framework, SemaMark. It leverages the semantics as an alternative to simple hashes of tokens since the semantic meaning of the sentences will be likely preserved under paraphrase and the watermark can remain robust.”

Essentially, you keep pushing the subtle warping higher in abstraction, beyond individual words to more general things like how concepts get ordered, the meaning behind them, and so on. Such a higher-level signal is very hard to disguise when paraphrasing, and so is secure even against dedicated, smart paraphrasing attacks that try to reverse-engineer the watermark. In fact, there are now many advanced watermarking methods in the research literature that have been shown to be robust under paraphrasing attacks and even further human edits. Critics of watermarking have not updated accordingly.
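SemaMark itself derives its signal from learned sentence embeddings, and the code below does not reproduce it. It is a deliberately simplified Python toy of the general idea of moving the signal up a level of abstraction: a hypothetical hand-written synonym table, `MEANING`, stands in for meaning, and the keyed coin flip that decides whether a word counts as “green” depends on meaning classes rather than exact words. All names here are illustrative.

```python
import hashlib
from typing import Callable, List

# Hypothetical meaning classes: a hand-written stand-in for the learned
# sentence embeddings a real semantic watermark would use.
MEANING = {
    "the": "DET", "a": "DET",
    "big": "LARGE", "large": "LARGE", "huge": "LARGE",
    "fast": "QUICK", "quick": "QUICK", "rapid": "QUICK",
    "car": "VEHICLE", "automobile": "VEHICLE",
}


def _keyed_bit(key: str, *parts: str) -> bool:
    """Pseudo-random, key-dependent coin flip (green fraction = 0.5)."""
    digest = hashlib.sha256("|".join((key,) + parts).encode()).digest()
    return digest[0] % 2 == 0


def token_green(key: str, prev: str, word: str) -> bool:
    """Word-level scheme: depends on the exact previous word and current word."""
    return _keyed_bit(key, prev, word)


def semantic_green(key: str, prev: str, word: str) -> bool:
    """Toy semantic scheme: depends only on the meaning classes involved."""
    return _keyed_bit(key, MEANING.get(prev, prev), MEANING.get(word, word))


def green_fraction(words: List[str], key: str,
                   is_green: Callable[[str, str, str], bool]) -> float:
    """Share of words that land on green under the given scheme."""
    prevs = ["<s>"] + words[:-1]
    hits = [is_green(key, p, w) for p, w in zip(prevs, words)]
    return sum(hits) / len(hits)


original = ["the", "big", "fast", "car"]
paraphrase = ["a", "huge", "rapid", "automobile"]
for name, scheme in (("word-level", token_green), ("semantic", semantic_green)):
    print(name, green_fraction(original, "k", scheme), green_fraction(paraphrase, "k", scheme))
```

By construction, the semantic scheme gives both sentences the same score, while the word-level scheme’s score can change with every substituted word. Four words are of course far too few for real detection; the point is only that a signal keyed to meaning survives rewording that a signal keyed to exact words does not.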

Of course, for any paraphrasing-robust watermark that gets adopted, someone may one day develop a way around it that works to some degree. But avoidance will become increasingly costly, and better methods will continue to be developed, especially under a mandate to do so. Even if watermarking is never 100% effective against motivated attackers with deep pockets and deeper technical expertise, it locks even the most capable malicious actors into an arms race with some of the smartest people in the world. I wouldn’t want to be in a watermark-off with OpenAI, and I doubt hackers in Russia would either.

Now that they’re firmly in power, Republicans should still regulate AI, but in a way that neither interferes with legitimate uses of this technology nor dictates anything about the politics or capabilities of these amazing (sometimes scarily so) models, and yet still ensures a fair future with humans front and center in politics and culture. As it should be.
