2.3% chance an asteroid hits Earth, book blurbs finally die, and the new AI Deep Research can't find me a mattress

Desiderata #32: links and commentary

This entry in the Desiderata series is open to everyone. If you like it, please consider becoming a paid subscriber, as these entries are usually locked.

Table of Contents (open to all free subscribers too):

Don’t Look Up becomes real.

Die, book blurbs, die!

Die, indirect costs for scientific grants, die!

OpenAI’s Deep Research can’t find me a good mattress.

But Nate Silver is right on the unavoidable importance of AI.

My theory of emergence cropping up in a Nature journal.

Fun Desiderata art change.

New Jersey drones confirmed to be a big nothingburger.

Ask Me Anything.

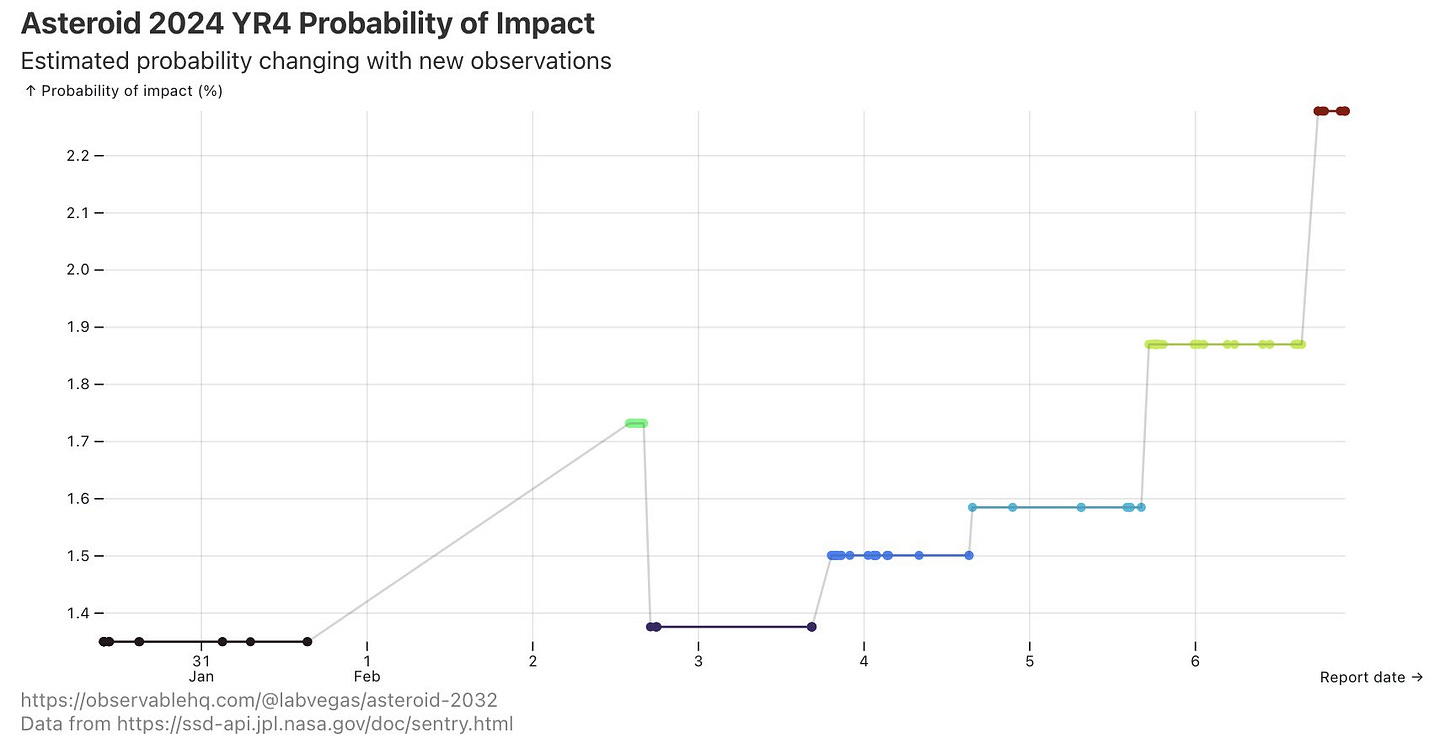

1. The asteroid named “2024 YR4” was discovered in December on a path toward Earth, and it has an uncanny knack for increasing its probability of impact. NASA had been giving it a 2% chance of hitting Earth in 2032; that’s now climbed to around 2.3%. Below is a real 3-second video of it passing near Earth in early January.

The asteroid is roughly the size of the object estimated to have caused the Tunguska event in 1908 (though these are only estimates, and it could be even larger). Here’s an account of what that was like from someone who was having breakfast about 40 miles away:

The split in the sky grew larger, and the entire northern side was covered with fire. At that moment I became so hot that I couldn't bear it as if my shirt was on fire; from the northern side, where the fire was, came strong heat. I wanted to tear off my shirt and throw it down, but then the sky shut closed, and a strong thump sounded, and I was thrown a few metres. I lost my senses for a moment, but then my wife ran out and led me to the house. After that such noise came, as if rocks were falling or cannons were firing, the Earth shook, and when I was on the ground, I pressed my head down, fearing rocks would smash it. When the sky opened up, hot wind raced between the houses, like from cannons, which left traces in the ground like pathways…

The worst-case scenario is that 2024 YR4 hits a populated area, like a city, with the force of an 8-megaton nuclear bomb (still much smaller than the largest nuclear bomb ever detonated, the roughly 50-megaton Tsar Bomba). It wouldn’t be world-ending, but it would still be a global disaster of the first order.

What’s problematic is that scientists use gravitational modeling to estimate the probability of impact, and the asteroid’s next close pass by Earth, in 2028, might alter its path. Essentially, if 2024 YR4 passes through particular gravitational “keyholes” in 2028, Earth’s gravity might shift its orbit just enough to make an impact in 2032 very likely.

The issue is that, unless further observations soon rule out all the keyholes and bring the probability down, we could be stuck in a weird state where the probability remains at ~1-3% for years, a number that feels psychologically so low it doesn’t seem worth paying attention to (although some people on forums are arguing for a chance as high as 6%). We’ll also lose sight of the asteroid completely for a window somewhere around 2025-2027, so if the estimated probability is still high by then, that would be… bad.

While I certainly wouldn’t wish for a “through the keyhole” event, if it did happen, there would be the silver lining of immense international cooperation and technological advancement aimed at diverting it. If a deflection mission were planned, it would almost certainly be a collaboration between Elon Musk’s SpaceX and organizations like NASA, the ESA, and the International Asteroid Warning Network. I’m well aware of the parallels to the movie Don’t Look Up, but such a turn of events would make sense if the universe consistently bends its arc toward rendering satire obsolete.

Personally, I always thought the flaw of Don’t Look Up was that it skipped exactly this epistemic scenario. It would have been more realistic, and funnier, if instead of a 99% chance of destroying Earth from the beginning, the asteroid had a 41% chance, and everyone just dismissed it because that’s less than half. Anyway, if the lower chance of impact holds, we’ll see in the coming years how humanity responds to it in real life. I’m hopeful. Relatively hopeful, that is.

2. Simon & Schuster, my own publisher and one of the largest, has announced that it will no longer require authors to scrounge like begging worms for book blurbs (in case you didn’t know, it’s not like the publishers provide them). Thank God. Or thank Sean Manning, the new young head of its flagship imprint. This change is long overdue. The requirement placed debut authors at a significant disadvantage, and outside of a few very big names, like Stephen King for horror or George R.R. Martin for fantasy, blurbs basically never had any impact on sales.

3. Speaking of changes to old bad systems, the NIH announced it is cutting indirect costs significantly, from rates as high as 70% down to a 15% cap. Most non-scientists won’t know what this means, but it’s a really big deal for how science works at a career level.

So what are “indirect costs”?

Basically, when I was at Tufts University as an assistant research professor, I mostly paid my own salary through grants. I applied for these grants, and the money I received went to pay my salary (which was fixed independently of the grant amount; a larger grant just paid my salary over more years). It always sounded like a lot when I first applied. But then the university took a chunk, sometimes half or more of it, as its “indirect costs.” In effect, indirect costs are the tax that universities levy on scientists for doing research under their roofs. So if you got a million-dollar grant from the taxpayer for your research, the university could take most of it.

Reducing indirect costs means that scientists keep the lion’s share of the grants they alone apply for and win, and it avoids the situation where scientists grind for grants that then get used to pay for a fancy new gym for undergraduates. [Editorial note: As pointed out in the comments, it turns out that, while this is true for all the grants I’m used to getting, it’s not universal. For a class of big funders (including the NIH), indirect costs are negotiated separately and paid on top of the direct research funds, so they don’t come directly out of scientists’ budgets at the point the grant is received.]
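For the NIH-style arrangement described in the editorial note, where indirect costs are paid on top of the direct research budget, the arithmetic of the cut can be sketched in a few lines. The $1,000,000 direct budget and the 50% rate are illustrative assumptions (they match the example a commenter gave), not any specific university’s figures, and the sketch ignores real-world details such as which direct costs the rate is actually applied to.

```python
# Hypothetical illustration of indirect costs paid ON TOP of direct costs.
# The $1,000,000 budget and the 50% rate are assumed example values.

def indirect_costs(direct_dollars: int, rate_percent: int) -> int:
    """Indirect costs owed on top of a direct research budget (whole dollars)."""
    return direct_dollars * rate_percent // 100


def total_award(direct_dollars: int, rate_percent: int) -> int:
    """Direct costs plus the indirect costs layered on top of them."""
    return direct_dollars + indirect_costs(direct_dollars, rate_percent)


direct = 1_000_000
for rate in (50, 15):  # an assumed negotiated rate vs. the new 15% cap
    print(f"{rate}% rate: indirect ${indirect_costs(direct, rate):,}, "
          f"total ${total_award(direct, rate):,}")
```

Under this model the direct research budget is $1,000,000 either way; what the cap shrinks is the money going to the university for infrastructure and administration (from $500,000 to $150,000 in this example), which is why the commenter expects staff cuts rather than smaller research budgets.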

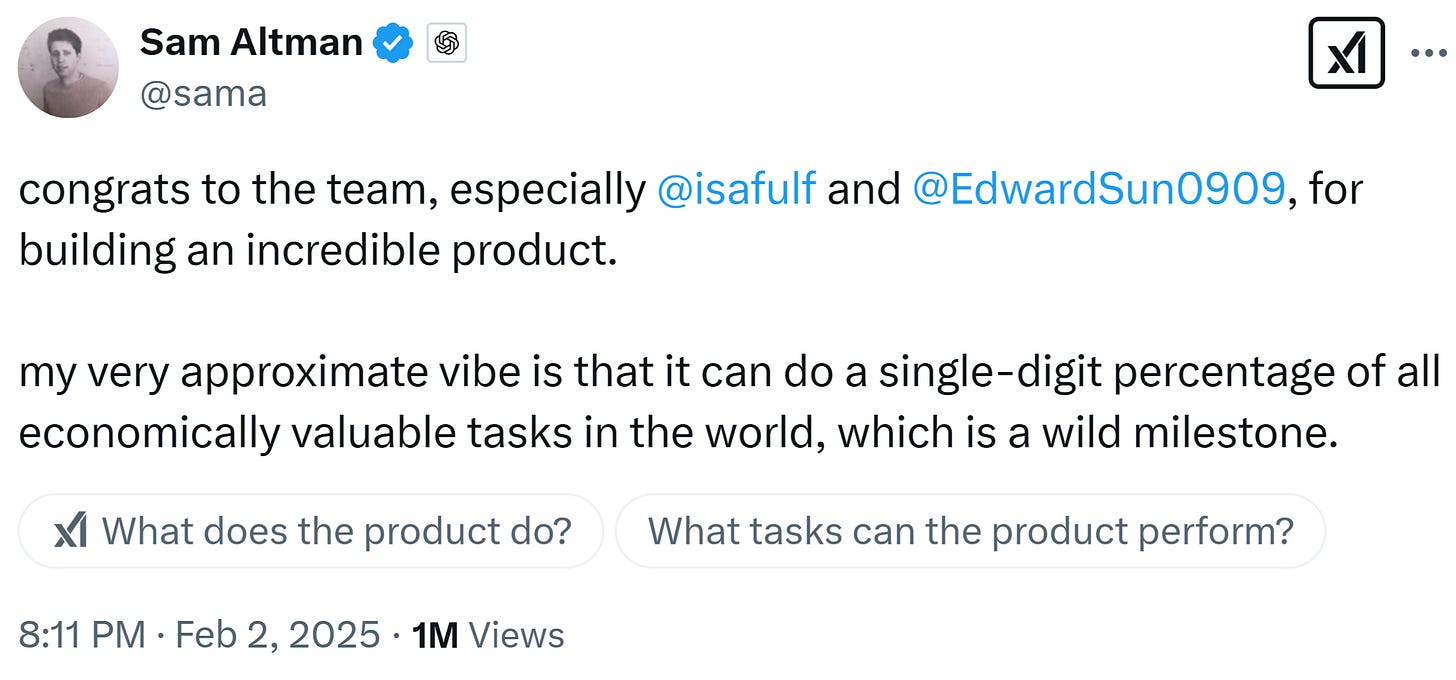

4. OpenAI released its new “Deep Research” tool. According to Sam Altman, it can do somewhere between 1% and 10% of all economically valuable tasks in the world.

Economist and Marginal Revolution author Tyler Cowen was particularly impressed.

Others noted plenty of hallucinations and mistakes on simple tasks; when asked to list all the NBA players, for instance, it got only 6 out of 30 teams correct. As usual, in my own testing I was impressed by some aspects, but I also noticed that a lot of the time it just settled for rewriting Wikipedia. There’s already a huge number of explainers online for almost every subject, so it’s hard to tell impressive output apart from formulaic rewrites.

I did try Deep Research on the ultimate test for AGI: finding me an exact material equivalent of a mattress that is no longer sold, one that is uniquely good for my back and that I’ve avoided getting rid of for years. Even with the tags, pictures of the material, etc., Deep Research fell on its face and gave me a bunch of useless filler citations. It was no help at all.

5. Counterpoint: Nate Silver wrote a great post over at Silver Bulletin, “It's time to come to grips with AI,” in which he argues that, while AI wasn’t much of a factor in the 2024 election…

2024 was probably the last election for which this was true. AI is the highest-stakes game of poker in the world right now. Even in a bearish case, where we merely achieve modest improvements over current LLMs and other technologies like driverless cars, far short of artificial superintelligence (ASI), it will be at least an important technology. Probably at least a high 7 or low 8 on what I call the Technological Richter Scale, with broadly disruptive effects on the distribution of wealth, power, agency, and how society organizes itself. And that’s before getting into p(doom), the possibility that civilization will destroy itself or enter a dystopia because of misaligned AI. And so, it’s a topic we’ll be covering more often here at Silver Bulletin.

As much as I sometimes wish I could write about AI less, Nate is absolutely right: it’s by far the most important topic in the world right now, for better or worse, and that’s only going to become more obvious over the next few years. Some updated version of Deep Research will, one day, find that mattress for me.

6. Thankfully, scientific research is still done by smart humans! And I was recently amazed to see my name in a new paper in Nature (okay, in a Nature imprint, but still).

A Chinese team at Beijing Normal University has been doing great work following up on my original theory of causal emergence. For those who only know me from my online writing, it’s a theory that allows scientists to measure emergence mathematically by identifying the most causally relevant scales of a system. You can read more about it here and also in The World Behind the World.

This new paper, led by Jiang Zhang, explores an alternative to the measure of causation the theory originally used (the effective information), relying instead on dynamical reversibility. I really like the approach. They made use of many of the model systems from our original paper.
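For readers curious what “measuring emergence mathematically” looks like in practice, here is a minimal sketch of the effective information (EI) for a small system described by a transition probability matrix, following the definition used in the original causal emergence work: the mutual information between a uniform intervention over current states and the resulting next states. The four-state toy system and the function names are illustrative choices for this sketch, not code from either paper, and it does not implement the dynamical reversibility measure from the new paper.

```python
from math import log2


def entropy_bits(dist):
    """Shannon entropy (in bits) of a discrete probability distribution."""
    return -sum(p * log2(p) for p in dist if p > 0)


def effective_information(tpm):
    """Effective information of a transition probability matrix (TPM).

    Row i of `tpm` is the distribution over next states given current state i.
    Under a uniform intervention over current states, EI is the entropy of the
    averaged effect distribution minus the average entropy of the rows.
    """
    n = len(tpm)
    effect = [sum(row[j] for row in tpm) / n for j in range(len(tpm[0]))]
    return entropy_bits(effect) - sum(entropy_bits(row) for row in tpm) / n


# Micro scale: states 0-2 move at random among themselves; state 3 stays put.
t = 1 / 3
micro = [
    [t, t, t, 0],
    [t, t, t, 0],
    [t, t, t, 0],
    [0, 0, 0, 1],
]
# Macro scale: group {0, 1, 2} into one macro state and keep {3} as the other.
macro = [
    [1, 0],
    [0, 1],
]

print(f"micro EI = {effective_information(micro):.3f} bits")  # 0.811
print(f"macro EI = {effective_information(macro):.3f} bits")  # 1.000
```

In this toy case the coarse-grained description carries more effective information (1 bit) than the micro-scale one (about 0.81 bits), which is the sense in which a macro scale can be the more causally relevant one.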

Their paper had great timing because, after taking almost two years off from science to just think and write and be as present as possible for my young kids, I have finally come up with some original scientific ideas worth pursuing again. So expect more from me soon in that department.

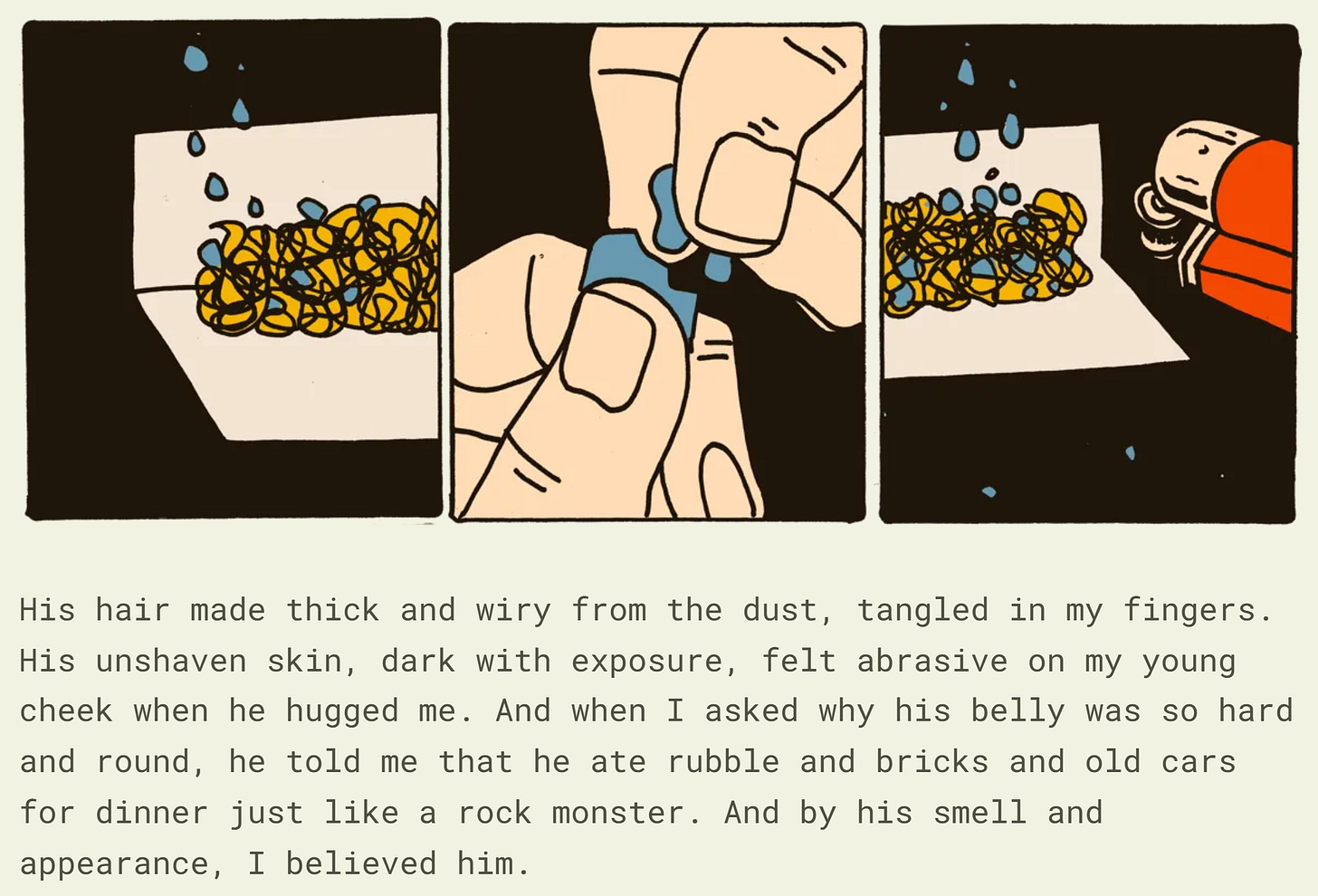

7. As you might have noticed at the beginning of the post, we have some new art from resident artist Alexander Naughton for this here Desiderata series. He made a huge collage of much of his previous artwork for TIP, and with each entry I’ll take a sample from it to reveal more.

It's therefore a good time to remind everyone they can commission Alexander Naughton for art. If you are a magazine editor, or are in want of a new album cover, or have an idea for a children’s book or graphic novel, or need any sort of art at all, please do contact him.

He also has his own Substack, Illustrated, where he posts lovely comics and thoughtful essays on life and the creative process.

8. From the archives. Just in December I was arguing that the mass hysteria over the New Jersey drones was nonsense, and this has now been confirmed through official channels: it was airplanes, hobbyist drones, and a small number of drone flights directly authorized by the FAA. On to the next hysteria!

9. As always for the Desiderata series, please treat this as an open thread. Comment and share whatever you’ve found interesting lately or been thinking about below, or ask any questions you might have.

Hi Erik, the information you provide about NIH indirects is incorrect. The indirects are not taken out of the funds that pay for the research; they are separate and pay for the infrastructure that supports the research. For example, if I propose a study that costs $1 million for the research (direct costs), the university receives an additional $500,000 to pay for the infrastructural and administrative costs (indirect costs) that support the research. Capping indirect costs at 15% will require universities to immediately fire staff, since the change affects funds that have already been budgeted for the remainder of this fiscal year. The impact is not just on Ivy League schools; it’s on all universities (state and private) that support NIH research.

That the AI wrote research papers at a PhD level is only possible if Cowen has terrible PhD students. My prior on that is insanely low.