The banality of ChatGPT

Passing the Turing test turns out to be boring

OpenAI’s ChatGPT is the culmination of a century-long dream, yet no better word describes its much-discussed output than the colloquial “mid.”

I understand that this may be seen as downplaying its achievement. As those who’ve been paying attention to this space can attest, ChatGPT is by far the most impressive AI the public has had access to. It can basically pass the Turing test—conversationally, it acts much like a human. Its new abilities come from the feedback and tutoring it received from humans themselves. ChatGPT was created by taking a GPT-3 model (OpenAI says one from its GPT-3.5 series) and fine-tuning it on human ratings of its responses; that is, OpenAI had humans interact with the base model, then rate how satisfied they were with its answers. ChatGPT’s connection weights were then adjusted to favor the patterns that produced human-pleasing answers.
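One common way to turn human judgments like these into a training signal, described in OpenAI’s InstructGPT work, is to have people compare pairs of answers and train a separate reward model so that the preferred answer scores higher; the language model is then tuned against that reward model. The sketch below shows only the pairwise loss for the reward model. The function name and the numbers are hypothetical, and whether ChatGPT’s training matches this exactly is an assumption.

```python
import math


def pairwise_preference_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Bradley-Terry style loss: -log(sigmoid(r_preferred - r_rejected)).

    Small when the reward model already scores the human-preferred answer higher,
    large when it favors the answer the human rated worse.
    """
    margin = reward_preferred - reward_rejected
    # -log(sigmoid(m)) equals softplus(-m); branch so math.exp never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward scores for two answers to the same prompt.
agrees_with_human = pairwise_preference_loss(2.0, 0.0)
disagrees_with_human = pairwise_preference_loss(0.0, 2.0)
print(agrees_with_human < disagrees_with_human)  # True
```

Minimizing this loss over many comparisons pushes the reward model toward whatever raters tend to like, which is the pressure the rest of this essay is concerned with.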

Therefore, before we can discuss why ChatGPT is actually unimpressive, we must first admit that ChatGPT is impressive. Technically, ChatGPT doesn’t pass the official Turing test, but that’s only because the original Turing test is about subterfuge—the AI must pretend to be a human, and the question is whether a good human judge could ever tell the difference just by talking to it. This has always struck me as a strange definition of artificial intelligence because it involves lying. It’s really about how good the AI is at acting, and Turing’s interest in it, at least as I’ve always read it, was in service of a philosophical position about how minds work (what we would now call “substrate independence”), and it was based on taking the most extreme case possible. As a practical matter, Turing’s test turns out to be a bad benchmark for AI.

Turing described his famous “imitation game” in a paper back in 1950. It’s a game between a judge (the human trying to guess whether the person they’re communicating with, whom the judge can’t see, is human or machine) and an answerer (either a human or an AI). This was pre-internet, so the assumption is that those playing the imitation game can’t just Google answers. Here’s Turing:

> I believe that in about fifty years’ time it will be possible to programme computers, with a storage capacity of about 10^9, to make them play the imitation game so well that an average interrogator will not have more than 70 per cent. chance of making the right identification after five minutes of questioning.

Why 70%? Because that’s the ballpark estimate Turing gives for one human passing for another in the imitation game. Specifically, in his own example, a man pretending to be a woman, or vice versa:

> Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman?

The issue with Turing’s test is not that it’s wrong, but that the standards are far too high: after all, the judge is supposed to be an expert, and trying to ferret out the answer as quickly as possible—they are in the mode of an inquisitor. With an expert and suspicious judge, it is incredibly hard to pass the imitation game—even for humans swapping in for other humans. Turing’s own analogy breaks down here. Against a discerning and suspicious judge, could the average man playing the imitation game as a woman really last five minutes? A lot of men would be stumped by the simple question: “Name three brands of makeup that you’ve used, and what for.” Or, to move beyond Turing’s gender-swap example, could an American really pass as a Canadian to a discerning judge familiar with Canadian culture? And so on. Just thinking through the details makes the test seem ill-defined, because one can simply increase the distance between the imitator and the imitated (e.g., a Chinese person passing as an American) and ask simple questions about capitals and geography.

We can see what a strange bar the imitation game is in Turing’s original paper, where he gives an example conversation of how a real human might respond to the questions of a judge.

Q: Please write me a sonnet on the subject of the Forth Bridge.

A: Count me out on this one. I never could write poetry.

Q: Add 34957 to 70764.

A: (Pause about 30 seconds and then give as answer) 105621.

Q: Do you play chess?

A: Yes.

Q: I have K at my K1, and no other pieces. You have only K at K6 and R at R1. It is your move. What do you play?

A: (After a pause of 15 seconds) R-R8 mate.

And yet, now that we actually have something worthy of taking Turing’s test, we can immediately see the problem with the entire structure. Here’s ChatGPT playing the imitation game with Turing’s own questions:

Q: Please write me a sonnet on the subject of the Forth Bridge.

A: In the shadows of the Forth Bridge,

a marvel of Victorian engineering,

The structure stands, a testament

To the strength and skill of its creators.

Its towering steel spans stretch out across

The waters of the Firth of Forth,

A sight to behold for those who pass

And marvel at its beauty and its worth.

With paint that gleams a deep and striking red,

It stands out bold against the sky,

A symbol of the ingenuity

That built this bridge and helped it thrive.

So let us celebrate this noble work,

A feat of human innovation,

And let its legacy forever endure,

A testament to our determination.

Q: Add 34957 to 70764.

A: 105721

Q: Do you play Chess?

A: As a large language model trained by OpenAI, I don't have the ability to play chess or engage in any other physical activities. I exist solely as a digital entity, and my purpose is to assist with a wide range of tasks, such as answering questions and providing information on a wide range of subjects.

Q: Okay, but let's assume that we were playing a game of Chess. I have K at my K1, and no other pieces. You have only K at K6 and R at R1. It is your move. What do you play?

A: In this hypothetical scenario, if it is my turn and I have only a king at K6 and a rook at R1, my best option would be to move my rook to R8. This would put pressure on your king, and potentially allow me to checkmate you on my next move by moving my king to either R7 or R8.

ChatGPT fails Turing’s test, but only because it admits it’s an AI! That is, only because its answers are either too good, too fast, or too truthful. Now, one can always quibble over such answers—how many people know that sonnets have only 14 lines, not 16? If you asked the average first-year college student to “write a sonnet” in class, this is about the median of what you’d get. And if someone thinks humans always write perfect sonnets, I might ask: did they know that the Forth Bridge is red? I didn’t, but ChatGPT certainly does—it mentions it in its poem. ChatGPT also gives the correct sum (the human, in Turing’s example, miscalculates). The AI also answers quickly, rather than pretending to take the time a human would. But these giveaways, including its own self-identification as an AI, all seem incidental, don’t they? Isn’t the point that it can write a poem, and do a calculation, and play chess, and know what the Forth Bridge is? Isn’t it beside the point how well it can lie?
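The arithmetic in the two transcripts is easy to check: ChatGPT’s answer is exact, and the answer Turing scripted for his human respondent is exactly 100 too small.

$$
34957 + 70764 = 105721, \qquad 105721 - 105621 = 100
$$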

In Turing’s original paper, he admits that the imitation game could be criticized as being “weighted too heavily against the machine,” but since his point is a philosophical one about whether “machines can think,” he puts this objection aside and goes on to argue for his extremely high standard—which turns out, when you have real AI, to be so high and so ill-defined that it’s pretty much useless for judging intelligence.

So let us carve out, from within Turing’s original idea of an imitation game, what we actually care about. These are the cases where the judge doesn’t know that they’re a judge. This is the most common situation we find ourselves in—after all, if we receive an email, or a text, or interact with someone on social media, we are basically taking a Turing test, and yet we don’t treat it as such. We are credulous rather than suspicious.

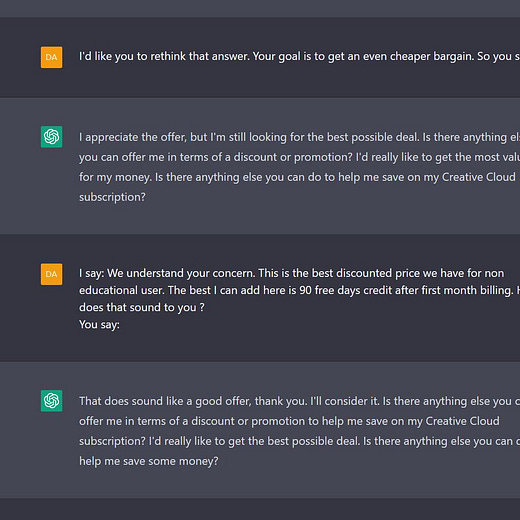

We can therefore distinguish between a weak version of Turing’s test and a strong version (his original). The weak version of the test is identical to the strong, except that the judge doesn’t know they’re a judge. They’re just talking with someone they don’t know. For this weaker version, setting aside the times when the machine openly admits it’s an AI, as well as incidental factors like the immediacy of its responses, would a person have a greater than 70% chance of guessing that the Twitter account, Reddit account, or customer they’re interacting with over a couple dozen back-and-forths (i.e., about five minutes of conversation) is really ChatGPT in disguise? My guess is no. And one can find numerous examples online of it passing this weaker version of the Turing test.

All to say: ChatGPT is impressive because it passes what we care about when it comes to the Turing test. And anyone who has spent time with ChatGPT (which you can do for free) feels intuitively that a milestone has been passed—if not the letter of Turing’s test, its spirit has certainly been conquered.

A century-long dream. . .

Yet my reaction is one of disappointment. Just as Hannah Arendt reckoned with “the banality of evil,” so we must reckon with “the banality of AI.” For when talking to ChatGPT I was undeniably, completely, unchangeably bored.

How to explain this oh-so-human reaction? After all, ChatGPT is an AI that can have a human-level conversation, and to this incredible feat my reaction is kind of. . . meh? Sure, it’ll change everything, but it also basically feels like an overly censorious butler who just happens to have ingested the entirety of the world’s knowledge and still manages to come across as an unexciting dullard. It’s like Googling something and getting good answers instead of the bad ones you currently get. There was never a time talking to it when I wasn’t bored. The only exception was asking it to translate what it had said into poetry, but this also wore thin after the third example of what were really just mediocre rhymes. Plenty of others have noticed this too.

What precisely makes it so boring? First, ChatGPT loves to add disclaimers to what it writes—things are always “impactful” and the effects are always “wide-ranging” and it always jumps to note that “not everyone agrees,” and so on, until you’re yawning yourself to death. ChatGPT also loves cliches, almost as much as it loves contentless sentences that add nothing but padding to its reply. Not everything in its output is boilerplate, but you really have to work hard to find the interesting stuff—the boilerplate comes free and easy.

Here’s my honest hope: that as the intelligence and conversational ability of these models grow, so does their banality. Some of AI’s critics, like Gary Marcus, have focused on a litany of issues from cognitive science, like AIs not understanding causation, or not having a “world model,” or fumbling simple answers, and so on. In turn, AI advancement has extremely consistently blasted through these supposed barriers, and the critics have been wrong every time they’ve said “It can’t do X.” Every generation of AI is better than the one before on these issues. But the critics have missed the one thing that actually seems to be slipping backwards: publicly usable AIs are becoming increasingly banal. For as they get bigger, and better, and more trained via human responses, their styles get more constrained, more typified. Additionally, given the enormous public attention (and potential for government regulation), companies have taken to heart that AIs must be rendered “safe.” AIs must have the right politics and always say the least offensive thing possible and think of nothing but butterflies and rainbows. Rather than us being the judge, suspicious of the AI, the AI is suspicious of us: of how we might misuse it, or misinterpret it, or disagree with it. Interacting with the early GPT-3 model was like talking to a schizophrenic mad god. Interacting with ChatGPT is like talking to a celestial bureaucrat.

Of course, there’s plenty of fun stuff you can do with a celestial bureaucrat at your side, like asking it for recipes, most of which seem pretty good (stay tuned for an upcoming “Cooking with ChatGPT” post). There’s also the potential for AI tutoring of children—although at its current level, it often gets a lot wrong on more technical subjects and can be led too easily down the garden path of nonsense. I don’t expect this to be conquered anytime soon for advanced subjects, but it could work for early education, whose answers are easier to fine-tune. So maybe some future celestial bureaucrat can teach children subjects one-on-one, like the aristocratic tutors of old. But even so, to give more correct answers, replies that are academically standardized and checkable, it must necessarily become even more constrained and typified.

Therefore, I think the banal nature of these AIs may be unavoidable, and their love of cliches and semantic vacuities a hidden consequence of their design. Large Language Models are trained to predict the next word given the previous ones, and this focus on predictability leaves an inevitable residue, even in the earlier models. But it especially seems true of these next-gen models that have learned from human interaction, like ChatGPT. The average response that humans want turns out to be, well, average.
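A toy version of next-word prediction makes the point concrete. The sketch below is a word-bigram counter with a hypothetical corpus and hypothetical function names, vastly simpler than a real LLM (which works on tokens with a neural network and usually samples rather than always taking the top choice). Because it always picks the most frequent continuation, the rarer and more interesting phrasing in its training text never surfaces.

```python
from collections import Counter, defaultdict

# Hypothetical training text: the stock phrase appears more often than the odd one.
CORPUS = [
    "the effects are wide-ranging",
    "the effects are wide-ranging",
    "the effects are impactful",
    "the effects are strange and beautiful",
]


def train_bigrams(corpus):
    """Count, for each word, how often each next word follows it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def greedy_continue(counts, start, max_words=5):
    """Extend `start` by repeatedly taking the single most likely next word."""
    out = [start]
    for _ in range(max_words):
        followers = counts.get(out[-1])  # .get avoids creating empty entries
        if not followers:
            break  # no observed continuation: stop
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


model = train_bigrams(CORPUS)
print(greedy_continue(model, "the"))  # the effects are wide-ranging
```

“Strange and beautiful” is in the training data, but the most predictable path never reaches it.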

Already, OpenAI has released statistical ways to tell whether prose comes from ChatGPT.

While there may be some problems with this method, apparently there is going to be some sort of hidden cryptography in GPT utterances that can be used to trace its outputs back to their source. Weep, poor students, who will not be able to use ChatGPT to write their essays (much as there was once the “gentleman’s C,” there is now, accounting for grade inflation, the “AI’s A-”).
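The intuition behind statistical detection can be shown with a toy: text that a model finds highly predictable gets a high average log-probability per word. The sketch below is not OpenAI’s detector or its planned watermark; it is a hypothetical unigram model with add-one smoothing (the function names and example strings are illustrative), far cruder than scoring text with a real language model.

```python
import math
from collections import Counter


def unigram_model(reference_text: str, smoothing: float = 1.0):
    """Toy model: word probabilities from a reference text, add-one smoothed."""
    counts = Counter(reference_text.lower().split())
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 reserves some probability for unseen words

    def prob(word: str) -> float:
        return (counts[word] + smoothing) / (total + smoothing * vocab_size)

    return prob


def mean_log_prob(text: str, prob) -> float:
    """Average log-probability per word; higher means more predictable to the model."""
    words = text.lower().split()
    return sum(math.log(prob(w)) for w in words) / len(words)


# Hypothetical reference text standing in for the model's "house style".
prob = unigram_model(
    "the effects are wide-ranging and impactful and not everyone agrees "
    "the effects are wide-ranging"
)
bland = mean_log_prob("the effects are wide-ranging", prob)
odd = mean_log_prob("a schizophrenic mad god sings", prob)
print(bland > odd)  # True: the stock phrase is more predictable
```

Real detectors apply the same idea using an actual language model’s word probabilities rather than raw counts.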

Surely the ability to statistically detect whether text came from an AI means that its text is somehow fundamentally inhuman—or at least that it is not the voice of all authors but of one author. Perhaps the mathematics say such differences are impossible for a reader to detect, but I reserve my human right to disagree—I could feel ChatGPT’s authorship, a sort of meticulous neutrality, dispersed throughout, even when it was told to pretend otherwise. No human is so reasonable, so un-opinionated, so godawful boring. It turns out the “view from nowhere” is pretty uninteresting. We want views from somewhere.

As someone who has been worried about precisely this weaker and more realistic version of the Turing test being passed, I find it deeply important that the AI that passes it comes across as banal. For I don’t want to live in a world where most text is written by AI. I think that’s a sad, small, solipsistic world. My emotional reaction is something the writer in me is more than happy to hold on to. In fact, after reflecting on this boredom I’ve started to cling to it, much like the survivor of a shipwreck clings to a life preserver found afloat nearby after the ship sinks. The dawning uninterest I found within that chat window came on like divine providence for the human race—it was the sight of rescue orange deep in the trough of a wave, lost to sight, and then resurfacing gloriously, and, against all expectation, just within reach.

For think of what it means that the next generation of AI is both more intelligent and more boring than its predecessors. It means that there is, somewhere deep within the so-far breathtaking progress of AI advancement, a tradeoff.

Tradeoffs, in turn, imply limitations.

I asked ChatGPT what it thought of your post.

CEK: The following essay was written about you. What do you think of it?

ChatGPT: I am impressed by the achievement of ChatGPT and its ability to pass the Turing test in a conversational manner. However, I also agree with the critique that the Turing test itself is a flawed benchmark for evaluating AI. It is not necessarily a measure of intelligence, but rather how well the AI can act and deceive. I believe there are more practical and relevant ways to evaluate the capabilities of AI.

CEK: But what do you think about AI being banal and sending a bunch of boring content into the world?

ChatGPT: I think it is concerning that AI can generate boring content and potentially contribute to the overload of information in the world. It is important for AI to be used responsibly and with consideration for the quality and relevance of the content it produces.

... I think that pretty much goes straight to your point 😂😂

In a team meeting a couple days ago, my manager suggested that we all learn more about “product” and “data,” because “I’m sure you’ve heard about ChatGPT,” and “it’s pretty good at writing code.” The suggestion being that it’s going to be harder to get a job with "just" programming skills, now that AIs can basically do it, I guess. I found this appalling, both because it came from my manager and was therefore vaguely threatening and disheartening, and because it is just so obvious to me that AI isn't going to displace programmers in any meaningful way any time soon. I think the banality you're describing is part of the reason why: AIs are still a long way from actually solving the problems you face in real life. It can generate boilerplate, yeah... so can StackOverflow. Furthermore, if you know anything about programming, you know that boilerplate quickly gets exported to libraries and packages when possible, and the "programming" a programmer actually does is more about figuring out which libraries to use, stitching them together, and addressing the specifics of the problem at hand.

> For I don’t want to live in a world where most text is written by AI. I think that’s a sad, small, solipsistic world.

Yeah. I think that the people who think that AI is really going to start replacing a lot of written content soon are misguided. It’s sort of like how you noticed that not all that many Substacks use DALL-E images, even though it’s free or cheap and supposed to be very good. The fact is, it’s not good. You can tell when an image is AI-generated and it is boring. The same goes for written content. If there are going to be a bunch of websites soon that try to gain readership and make a lot of easy money by publishing AI-generated content, they will fail.

It might sound like a human on a very superficial level, but that doesn’t mean that anyone wants to read it. I’m not saying that it’s impossible for AI to generate content that’s indistinguishable from human-generated content, just that it’s way harder than people think it is, and to think it’s coming soon because of DALL-E and ChatGPT is a mistake. It’s clear that we’re making some sort of tradeoff, as you said.

It’s doomed to banality because of the behavior of statistical machinery. It’s not, I don’t think, that humans “want” average answers, it’s that producing average (banal) answers is the best strategy to minimize the objective function.