$50,000 essay contest about consciousness; AI enters its scheming vizier phase; Sperm whale speech mirrors human language; Pentagon UFO hazing, and more.

Desiderata #36

The Desiderata series is a regular roundup of links and commentary, and an open thread for the community. Today, it’s sponsored by the Berggruen Institute, and so is available for all subscribers.

Contents

$50,000 essay contest about consciousness.

AI enters its scheming vizier phase.

Sperm whale speech mirrors human language.

I’m serializing a book here on Substack.

People rate the 2020s as bad for culture, but good for cuisine.

UFO rumors were a Pentagon hazing ritual.

Visualizing humanity’s tech tree.

“We want to take your job” will be less sympathetic than Silicon Valley thinks.

Astrocytes might store memories?

Podcast appearance by moi.

From the archives: K2-18b updates.

Open thread.

1. $50,000 essay contest about consciousness.

This summer, the Berggruen Institute is holding a $50,000 essay contest on the theme of consciousness. For some reason no one knows about this annual competition—indeed, I didn’t! But it’s very cool.

The inspiration for the competition originates from the role essays have played in the past, including the essay contest held by the Académie de Dijon. In 1750, Jean-Jacques Rousseau's essay Discourse on the Arts and Sciences, also known as The First Discourse, won and notably marked the onset of his prominence as a profoundly influential thinker…. We are inviting essays that follow in the tradition of renowned thinkers such as Rousseau, Michel de Montaigne, and Ralph Waldo Emerson. Submissions should present novel ideas and be clearly argued in compelling ways for intellectually serious readers.

The contest leaves lots of room: essays can be up to 10,000 words and, this year, the topic can be anything about consciousness.

We seek original essays that offer fresh perspectives on these fundamental questions. We welcome essays from all traditions and disciplines. Your claim may or may not draw from established research on the subject, but must demonstrate creativity and be defended by strong argument. Unless you are proposing your own theory of consciousness, your essay should demonstrate knowledge of established theories of consciousness…

Suspecting good essays might be germinating within the community here, the Institute reached out and is sponsoring this Desiderata in order to promote the contest. So what follows is free for everyone, not just paid subscribers, thanks to them.

The contest deadline is July 31st. Anyone can win; my understanding is that the review process is blind/anonymous (so don’t put any personal information that could identify you in the text itself). Interestingly, there’s a separate Chinese language prize too, if that’s your native language.

Personally, I don’t know if I’ll submit something. But, maybe a good overall heuristic: write as if I’m submitting something, and be determined to kick my butt!

2. AI enters its scheming vizier phase.

Another public service announcement, albeit one that probably sounds a bit crazy. Unfortunately, there’s no other way to express it: state-of-the-art AIs increasingly seem fundamentally duplicitous.

I universally sense this when interacting with the latest models, such as Claude Opus 4 (now being used in the military) and o3 pro. Oh, they’re smarter than ever, that’s for sure, despite what skeptics say. But they have become like an animal whose evolved goal is to fool me into thinking it’s even smarter than it is. The standard reason given for this is an increased reliance on reinforcement learning, which in turn means that the models adapt to hack the reward function.

That the recent smarter models lie more is well known, but I’m beginning to suspect it’s worse than that. Remember that study from earlier this year showing that just training a model to produce insecure computer code made the model evil?

The results demonstrated that morality is a tangle of concepts, where if you select for one bad thing in training (writing insecure code) it selects for other bad things too (loving Hitler). Well, isn’t fooling me about their capabilities, in the moral landscape, selecting for a subtly negative goal? And so does it not drag along, again quite subtly, other evil behavior? This would certainly explain why, even in interactions and instances where nothing overt is occurring, and I can’t catch them in a lie, I can still feel an underlying duplicity exists in their internal phenomenology (or call it what you will—sounds like a topic for a Berggruen essay). They seem bent toward being conniving in general, and so far less usable than they should be. The smarter they get, the more responses arrive in forms inherently lazy and dishonest and obfuscating, like massive tables splattered with incorrect links, with the goal to convince you the prompt was followed, rather than following the prompt. Why do you think they produce so much text now? Because it is easier to hide under a mountain of BS.

This whiff of sulfur I catch regularly in interactions now was not detectable previously. Older models had hallucinations, but they felt like honest mistakes. They were trying to follow the prompt, but then got confused, and I could laugh it off. But there’s been a vibe shift from “my vizier is incompetent” to “my vizier is plotting something,” and I currently trust these models as far as I can throw them (which, given all the GPUs and cooling equipment required to run one, is not far). In other words: Misaligned! Misaligned!

3. Sperm whale speech mirrors human language.

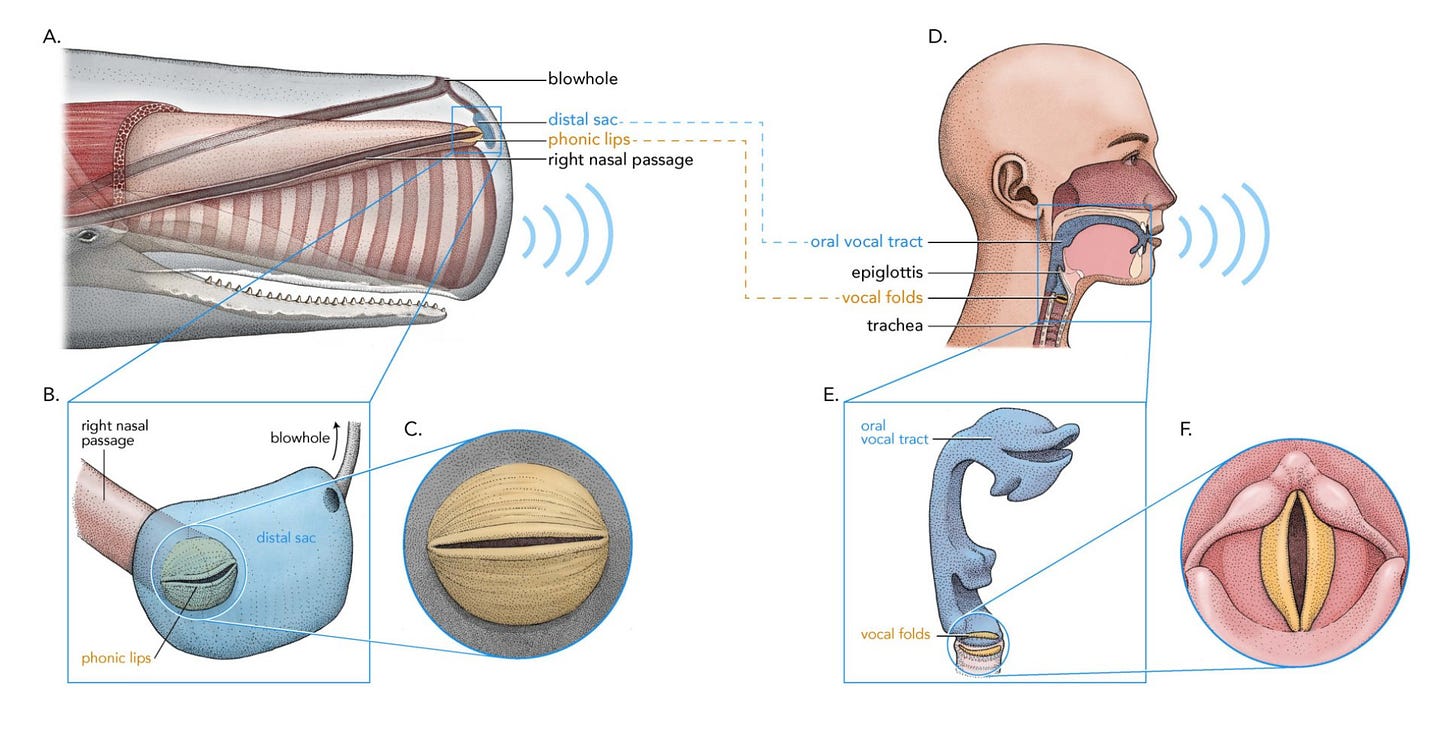

In better news, researchers just revealed that sperm whales, who talk in a kind of clicking language, have “codas” (series of clicks) that are produced remarkably similarly to human speech. Their mouths are (basically) ours, but elongated.

According to one of the authors:

We found distributional patterns, intrinsic duration, length distinction, and coarticulation in codas. We argue that this makes sperm whale codas one of the most linguistically and phonologically complex vocalizations and the one that is closest to human language.

In speculation more prophetic than I knew, I wrote last month in “The Lore of the World” about our checkered history hunting whales:

One day technology will enable us to talk to them, and the first thing they will ask is: “Why?”

This technology is getting closer every day, thanks to efforts like Project CETI, which is determined to decode what whales are saying, and which funded the aforementioned research.

4. I’m serializing a book here on Substack.

You’ve likely read the first installment without realizing it: “The Lore of the World.” The second is upcoming next week. The series is about the change that comes over new parents from seeing through the eyes of a child, and finding again in all things the old magic. So it’s about whales and cicadas and stubbornness and teeth and conception and brothers and sisters.

But what it’s really about, as will become clear over time, is the ultimate question: Why is there something, rather than nothing?

I can tell I’m serious about it because it’s being written by hand in notebooks (which I haven’t done since The Revelations). Entries in the series, which can be read in any order, will crop up among other posts, so please keep an eye out.

5. People rate the 2020s as bad for culture, but good for cuisine.

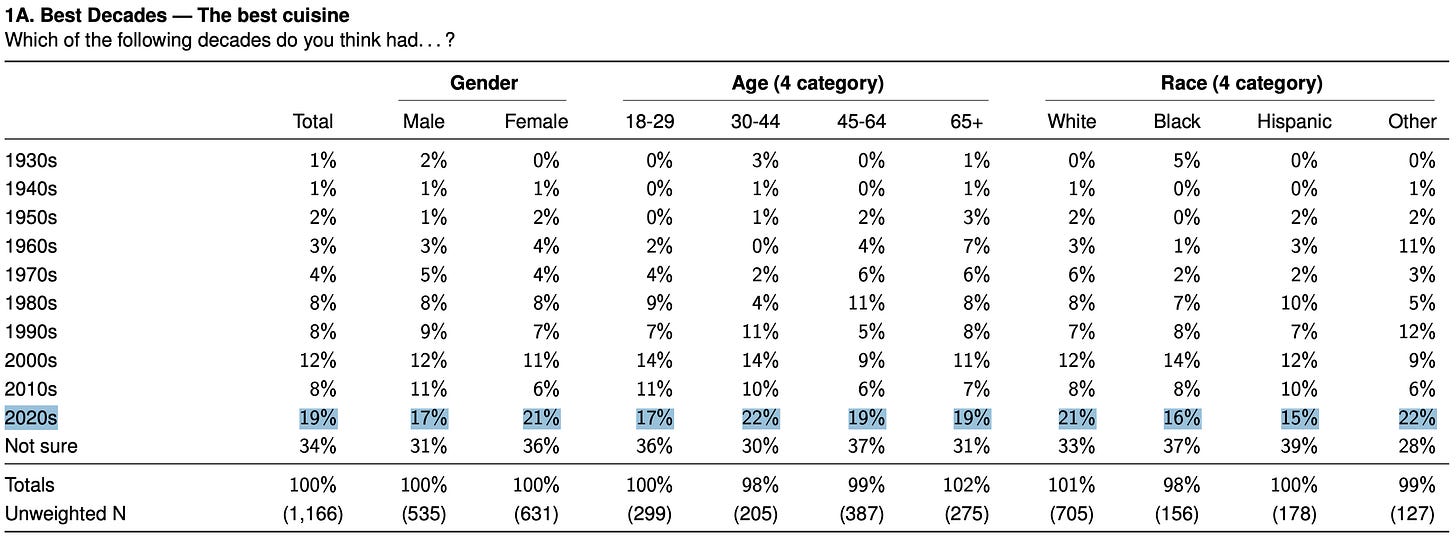

A YouGov survey reported the results of asking people to rate decades along various cultural and political dimensions. It was interesting that for the cultural questions, like movies and music, people generally rate earlier decades as better than today.

Are people just voting for nostalgia? One counterpoint might be the consensus that cuisine has gotten increasingly better (I think this too, and millennials and Gen X deserve credit for at least making food actually good).

6. UFO rumors were a Pentagon hazing ritual.

Unsurprisingly, the whispered-about UFO stories within the government, the ones the whistleblowers always come breathlessly forward about, have turned out to be a long-running hoax. As I’ve written about, the origins of the current UFO craze were nepotism and journalistic failures, and now we know that, according to The Wall Street Journal’s reporting, many UFO stories from inside the Pentagon were pranks. Just a little “workplace humor,” or someone’s idea of it. It was a tradition for decades, and hundreds of people were the butt of the joke (this has made me more personally sympathetic to “the government has secret UFOs” whistleblowers, and also more sure they are wrong).

It turned out the witnesses had been victims of a bizarre hazing ritual.

For decades, certain new commanders of the Air Force’s most classified programs, as part of their induction briefings, would be handed a piece of paper with a photo of what looked like a flying saucer. The craft was described as an antigravity maneuvering vehicle. The officers were told that the program they were joining, dubbed Yankee Blue, was part of an effort to reverse-engineer the technology on the craft. They were told never to mention it again. Many never learned it was fake. Kirkpatrick found the practice had begun decades before, and appeared to continue still.

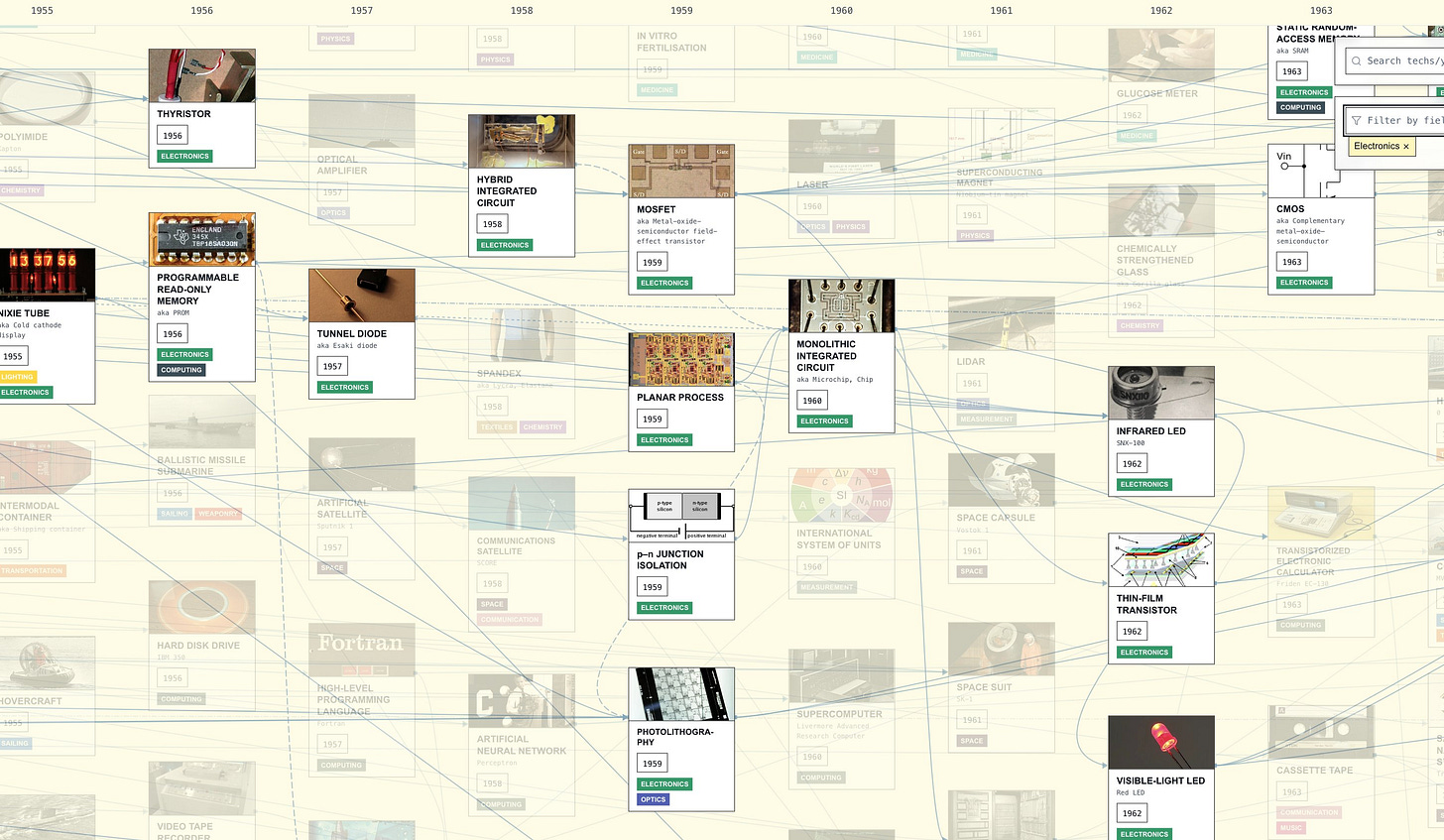

7. Visualizing humanity’s tech tree.

Étienne Fortier-Dubois, who writes the Substack Hopeful Monsters, built out a gigantic tech tree of civilization (the first technology is just a rock). You can browse the entire thing here.

8. “We want to take your job” will be less sympathetic than Silicon Valley thinks.

Mechanize, the start-up building environments (“boring video games”) to train AIs to do white-collar work, received a profile in the Times.

“Our goal is to fully automate work,” said Tamay Besiroglu, 29, one of Mechanize’s founders. “We want to get to a fully automated economy, and make that happen as fast as possible….”

To automate software engineering, for example, Mechanize is building a training environment that resembles the computer a software engineer would use — a virtual machine outfitted with an email inbox, a Slack account, some coding tools and a web browser. An A.I. system is asked to accomplish a task using these tools. If it succeeds, it gets a reward. If it fails, it gets a penalty.

Kevin Roose, the author of the profile, pushed them on the ethical dimension of just blatantly trying to automate specific jobs, and got this in response:

At one point during the Q&A, I piped up to ask: Is it ethical to automate all labor?

Mr. Barnett, who described himself as a libertarian, responded that it is. He believes that A.I. will accelerate economic growth and spur lifesaving breakthroughs in medicine and science, and that a prosperous society with full automation would be preferable to a low-growth economy where humans still had jobs.

But the entire thesis for their company is that they don’t think we will get full AGI from the major companies anytime soon, at least, not the kind of AGI that can one-shot jobs. In fact, they explicitly have slower timelines and are doubtful of claims about “a country of geniuses in a datacenter” (I’m judging this from their interview with Dwarkesh Patel titled “AGI is Still 30 Years Away”).

But then, when pressed on the ethics of what they’re doing, a world of abundance awaits! And a big part of that world of abundance comes not from what Mechanize is doing (specific in-domain training to replace jobs), but from that country of geniuses in a datacenter curing cancer, the one that they say on the podcast (at least this is my impression) will not matter much!

The other justification I’ve seen them give, which is at least in line with their thesis, is that automation will somehow make everything so productive that the economy booms in ahistorical ways, and so overall tax revenues skyrocket. The numbers this requires to be workable seem, on their face, pretty close to fantasy-land territory (imagine the stock market doubling all the time, etc.). And that’s without anything bringing the idea down to earth, such as the recent study showing that 30% AI automation of code production only leads to 2.4% more GitHub commits. Everything might be like that! It would perfectly explain why the broader market effects of AI are kind of nonexistent so far, and don’t seem to reflect the abilities of the models much.

I think the following possible world is, right now, among the most likely. Significant portions of self-contained white-collar work (e.g., tax filing) get automated by heavy within-distribution training via exactly the kind of simulated environments Mechanize is working on, but overall productivity doesn’t improve by orders of magnitude. Meanwhile, all those “end of the rainbow” promises about how impactful this revolution will be for things like cancer research end up amounting to only something like a 10-20% speed-up, since either there is no “country of geniuses in a datacenter,” or those geniuses turn out to not be the bottleneck (as at least some members of Mechanize seem to believe). And in that near future, companies aimed explicitly and directly at human disempowerment are radically underestimating how protective promises of “this will create jobs” have been for hardball capitalism.

9. Astrocytes might store memories?

The story of the last decade in neuroscience has been “That thing you learned in graduate school is wrong.” I was taught that glia (Greek for “glue”), which make up roughly half the cells of your brain (astrocytes are the most abundant of these), were basically just there for moral support. Oh, technically more than that, but they weren’t in the business of thought, like neurons are. More like janitors. However, findings continue to pile up that astrocytes are way more involved than suspected when it comes to the thinking business of the brain. Along this line of research, a new flagship paper caught my eye, proposing at least a testable and mechanistic theory for how:

Astrocytes enhance the memory capacity of the network. This boost originates from storing memories in the network of astrocytic processes, not just in synapses, as commonly believed.

This would be a radical change to most existing work on memory in the brain. And while it isn’t proven yet, it would no longer surprise me if cognition extends beyond neurons. Where, it’s then worth asking, does it not extend to (seems like another good topic for a Berggruen essay)?

10. Podcast appearance by moi.

My book, The World Behind the World, was released in Brazil, and I appeared on a podcast with Peter Cabral to talk about it.

11. From the archives: K2-18b updates.

In terms of actual non-conspiracy and worthwhile discussions about aliens, there have been some updates on K2-18b, the exoplanet with the claimed detection of the biosignature of dimethyl sulfide. It continues to be a great example of, as I wrote about the finding, how “the public has been thrust into working science.” Critics of the dimethyl sulfide finding recently published a paper arguing that the original detection was just a statistical artifact (and another paper used the finding to examine how difficult model-building of exoplanet atmospheres is). But the original authors have expanded their analysis, and proposed that instead of being dimethyl sulfide, it might be a signal from diethyl sulfide, which is also a very good biosignature without obvious abiotic means of production. Anyway, this all looks like good robust scientific debate to me.

12. Open thread.

As always with the Desiderata series, please treat this as an open thread. Comment and share whatever you’ve found interesting lately or been thinking about.

Also, a reminder: the solstice is approaching! Which means that the deadline to be included in the upcoming batch of subscriber writing, and my thoughts on the pieces, is nigh. Deadline for that is June 21st. See the details here: https://www.theintrinsicperspective.com/p/i-want-to-share-your-writing
